Deepfake Scammers

Introduction

As of May 2024, we continue to watch the rapid development of generative artificial intelligence with both excitement and concern. At a launch event, OpenAI showed the world that, with the newly announced GPT-4o, ChatGPT can hold conversations that much more closely resemble human interaction, understanding and producing content from voice, text, and images. Not wanting to fall behind in the AI wars, Google shared a new feature of its own generative AI, Gemini, shortly after OpenAI. With this feature, Gemini could instantly tell the user what was happening in their surroundings and where they were, using the camera application.

So what worries cybersecurity professionals and researchers the most about these developments, which we follow with great excitement and curiosity? In my opinion, it is how much these features will make fraud attempts and cyber attacks easier for scammers and threat actors. Just as with humanoid robots, progress in generative artificial intelligence is often measured against human or near-human productivity, which means that malicious individuals also benefit greatly, especially when creating image, video, audio, and text deepfakes. OpenAI CEO Sam ALTMAN seems to share my concerns, as he recently announced that they are working on a tool to detect deepfake images created with OpenAI’s DALL-E 3 model.

What is Deepfake?

Deepfakes are synthetic media that have been digitally manipulated to replace one person’s likeness convincingly with that of another. Coined in 2017 by a Reddit user, the term has been expanded to include other digital creations such as realistic images of human subjects that do not exist in real life. While the act of creating fake content is not new, deepfakes leverage tools and techniques from machine learning and artificial intelligence, including facial recognition algorithms and artificial neural networks such as variational autoencoders (VAEs) and generative adversarial networks (GANs). In turn the field of image forensics develops techniques to detect manipulated images. (Source: Wikipedia)

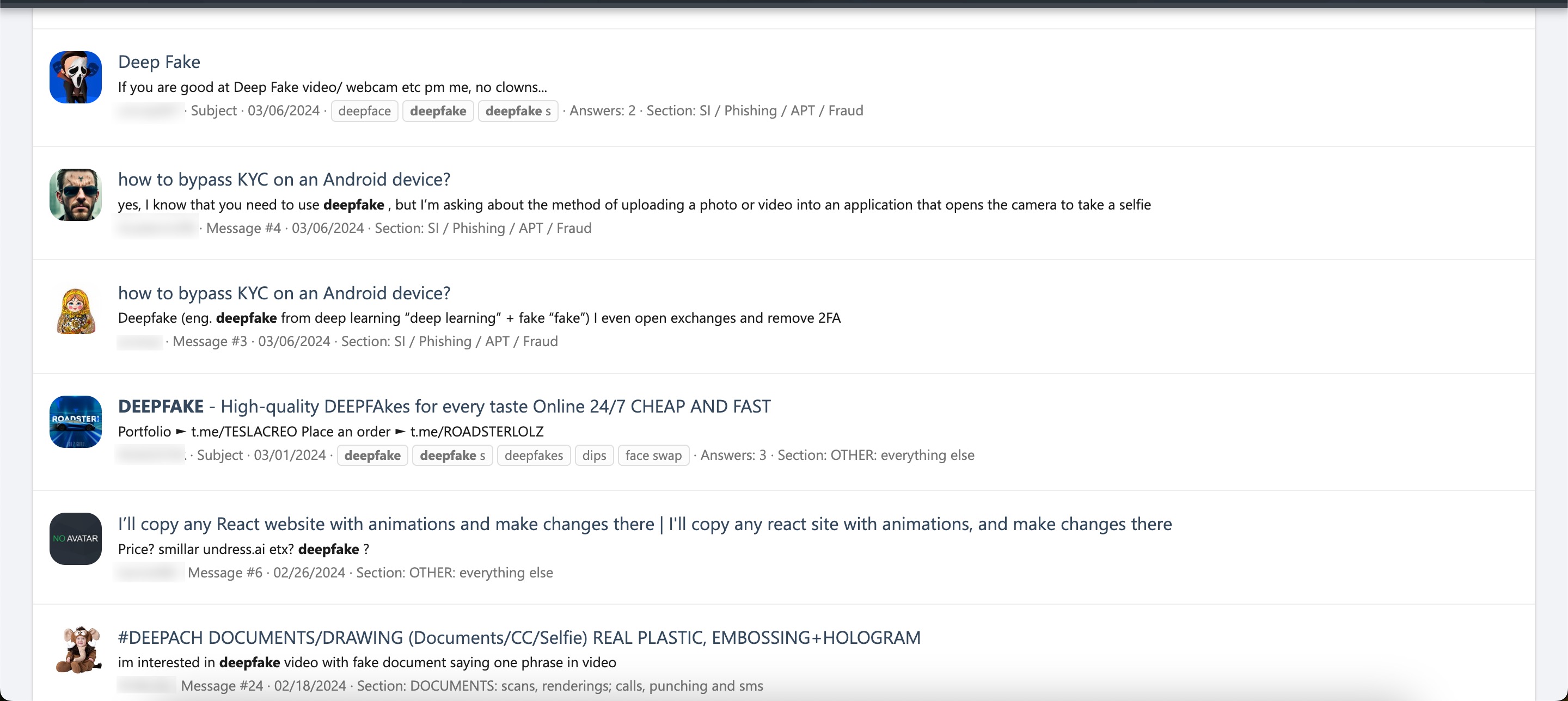

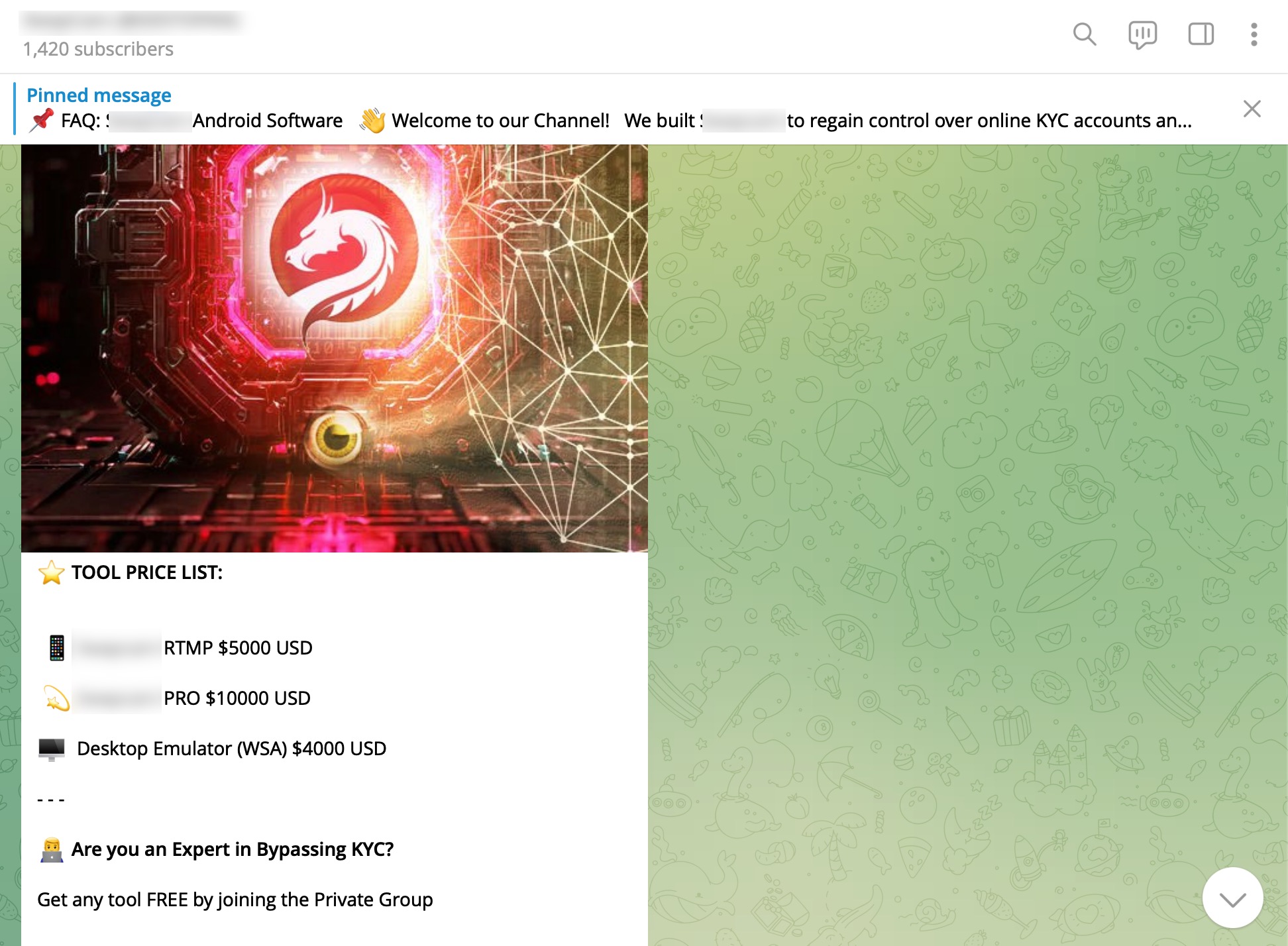

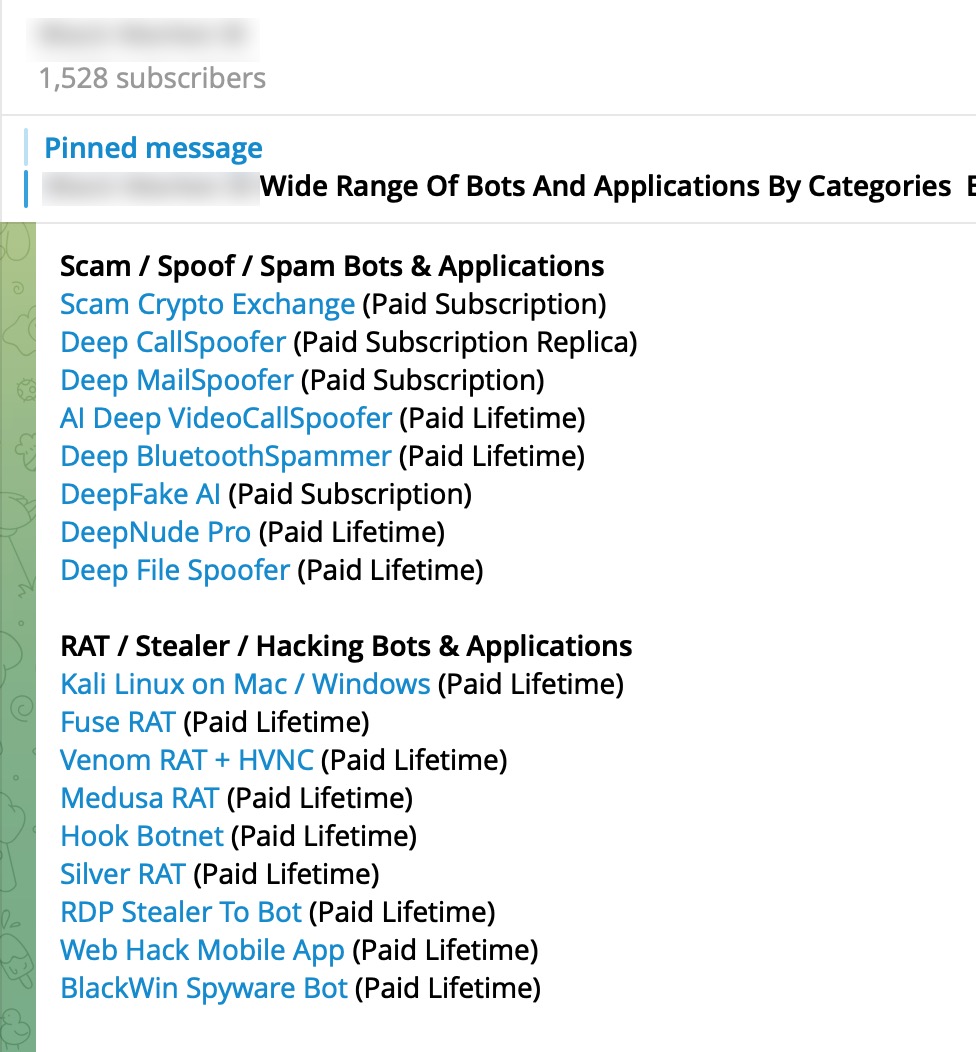

In recent years, we have seen deepfakes used frequently for disinformation (especially during the Russia-Ukraine war), fraud, and parody. Meanwhile, on the forums and Telegram channels where scammers and threat actors gather, the number of messages about deepfakes and fraud services has noticeably increased. Naturally, this increase also raises the risk that people who spend time on social networks will fall victim to such frauds and cyber attacks.

The Deepfake Gang

If you work at or receive services from a cyber threat intelligence firm like SOCRadar, which tracks the activities of threat actors and scammers and provides 24/7 intelligence to its clients, you have the opportunity to closely follow and stay informed about the suspicious and harmful activities carried out by malicious individuals across various platforms.

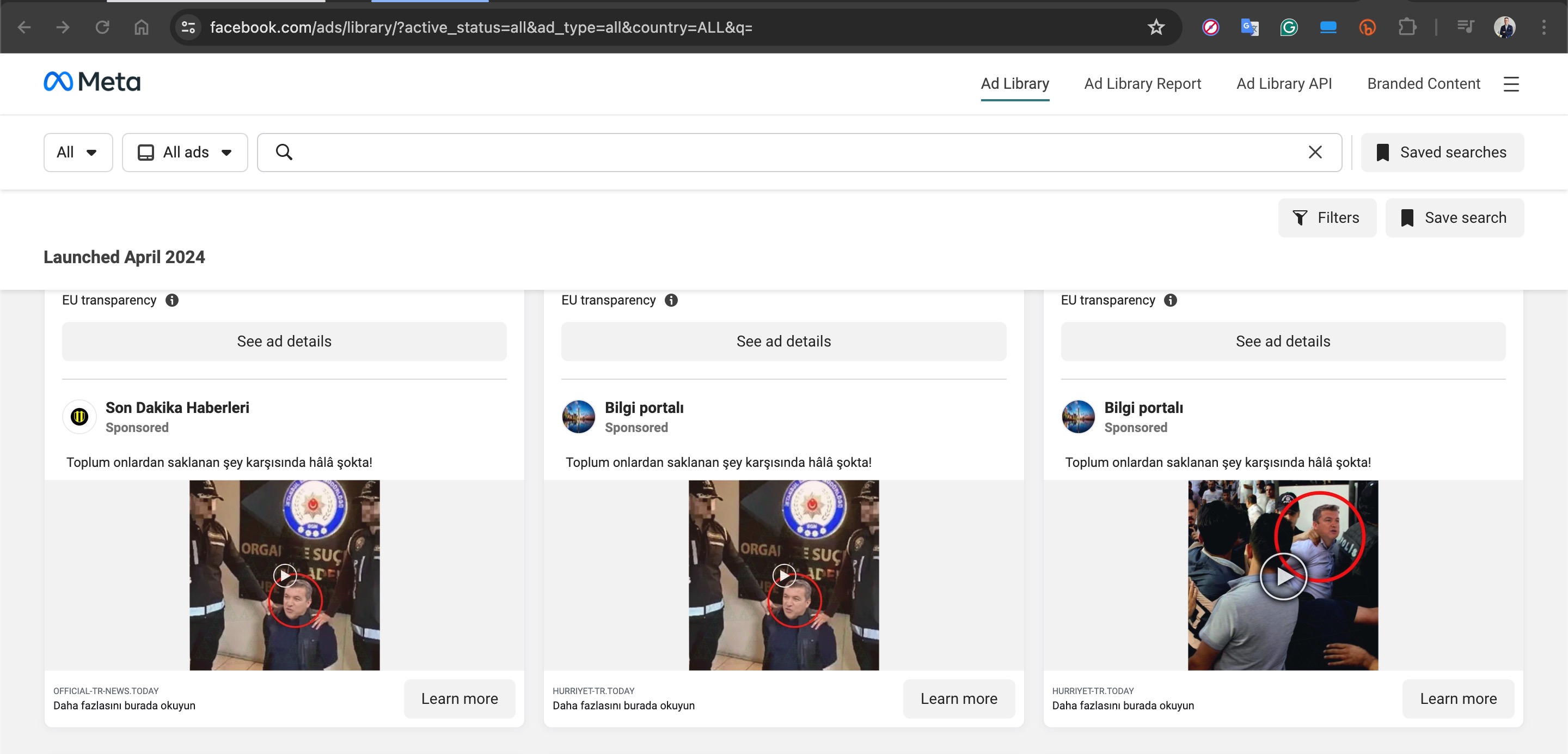

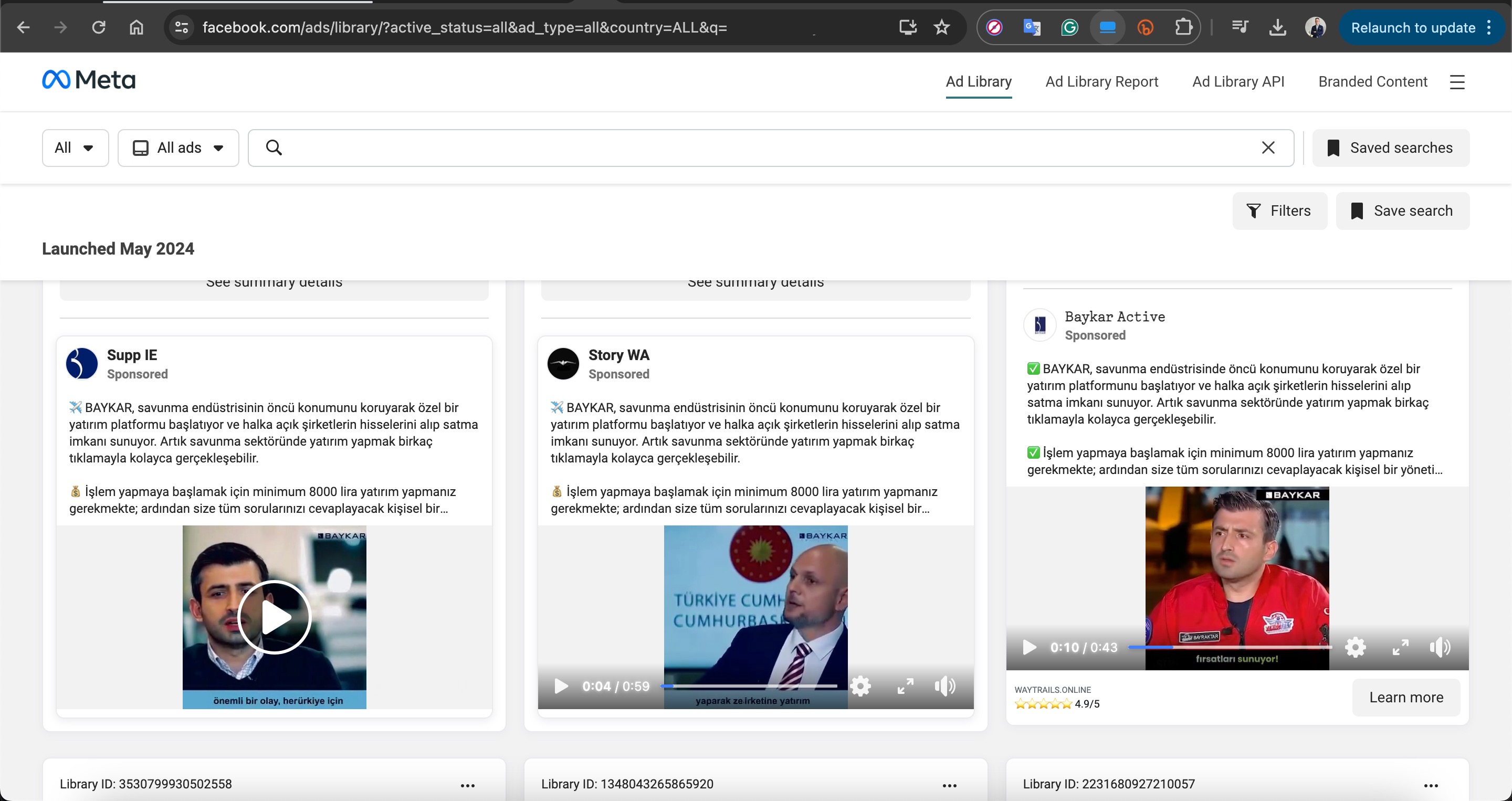

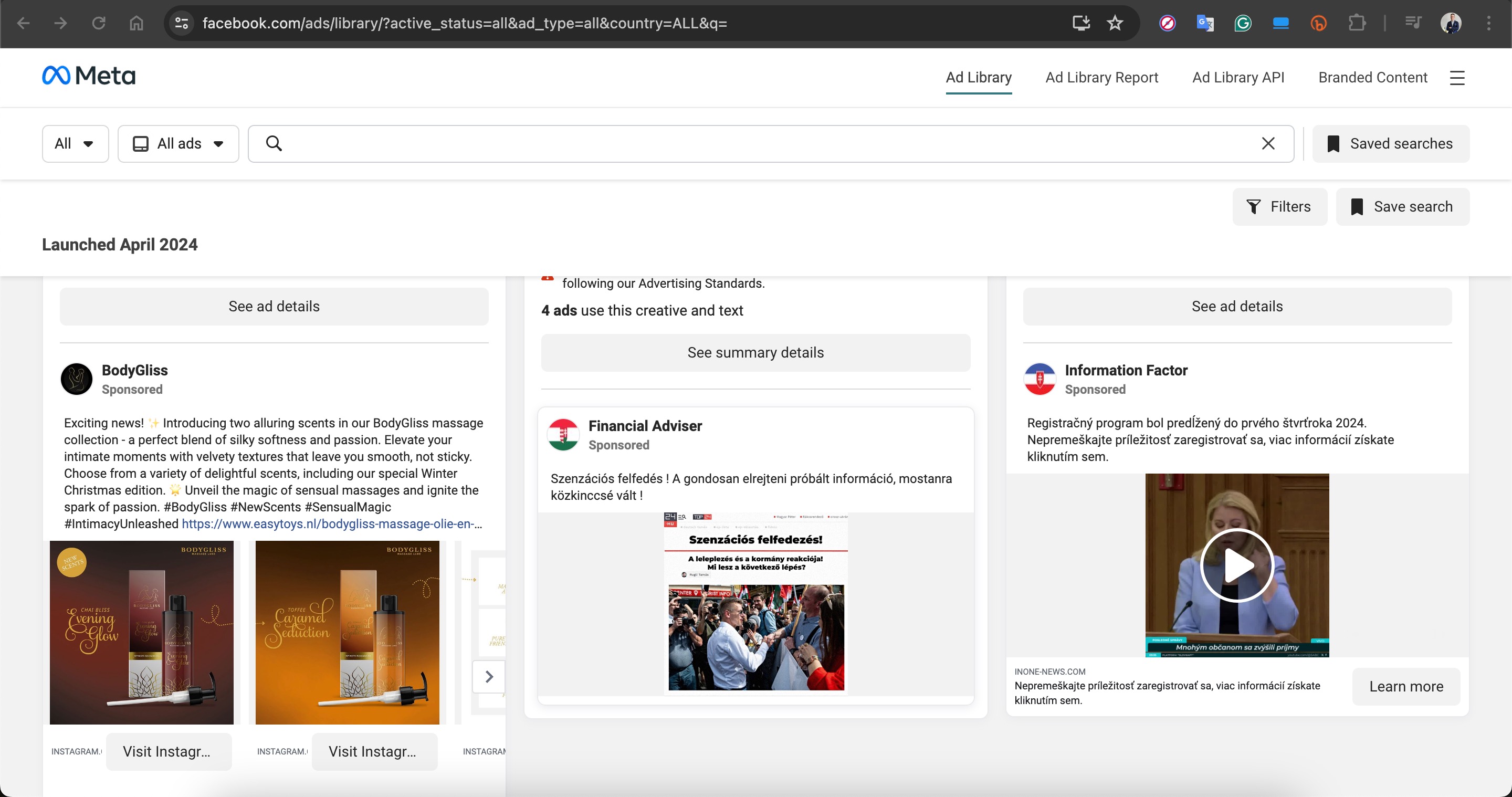

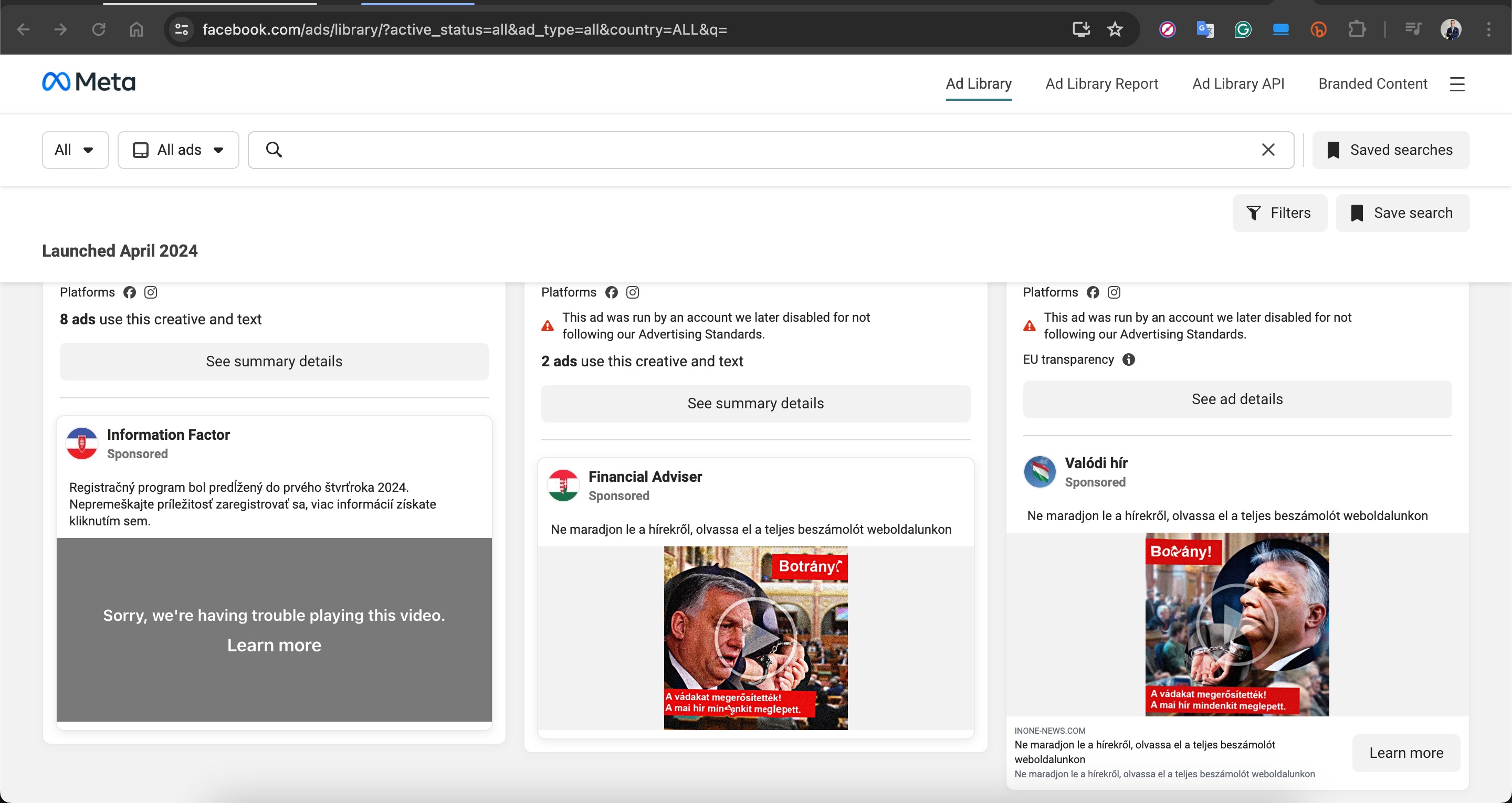

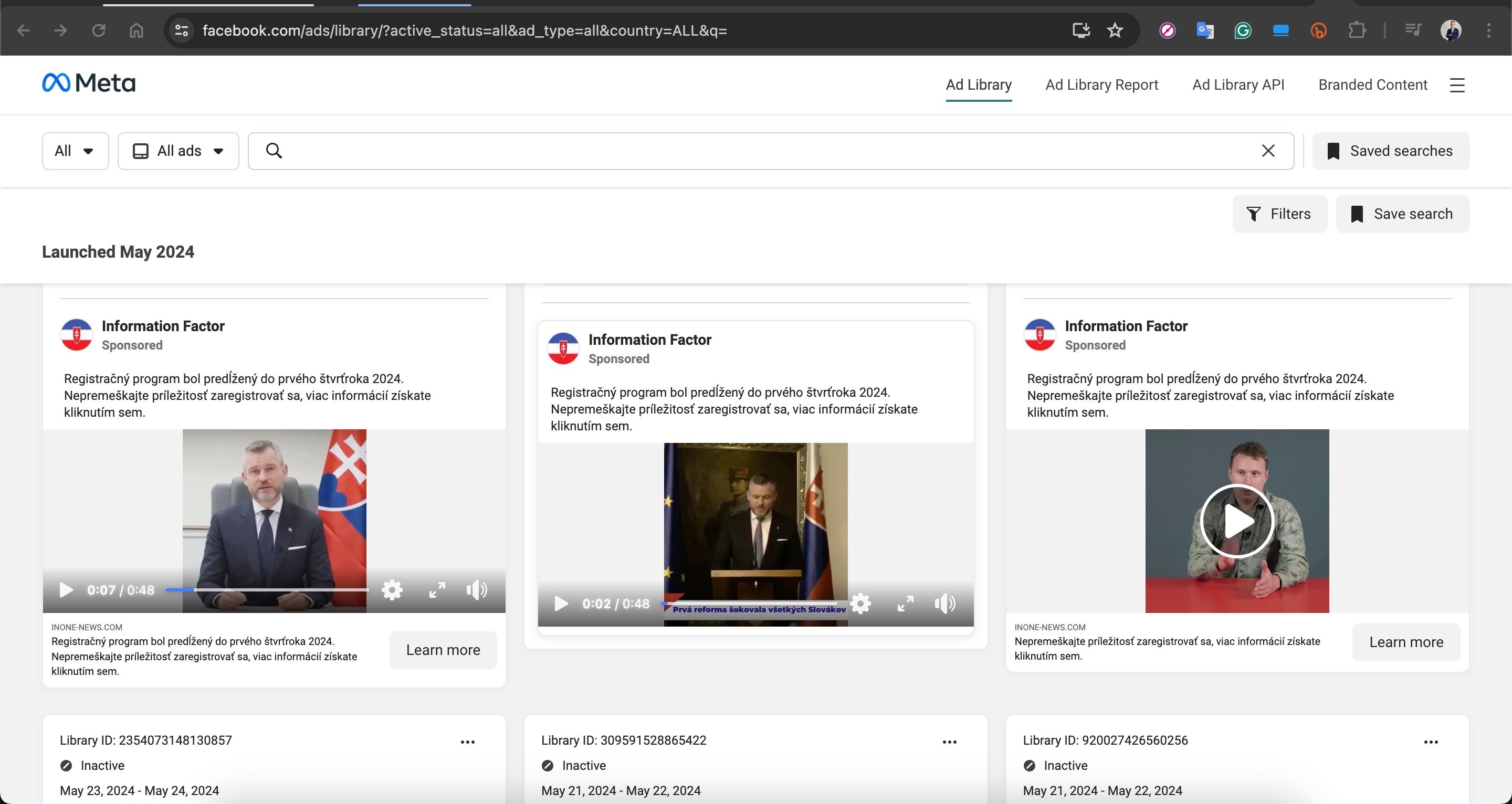

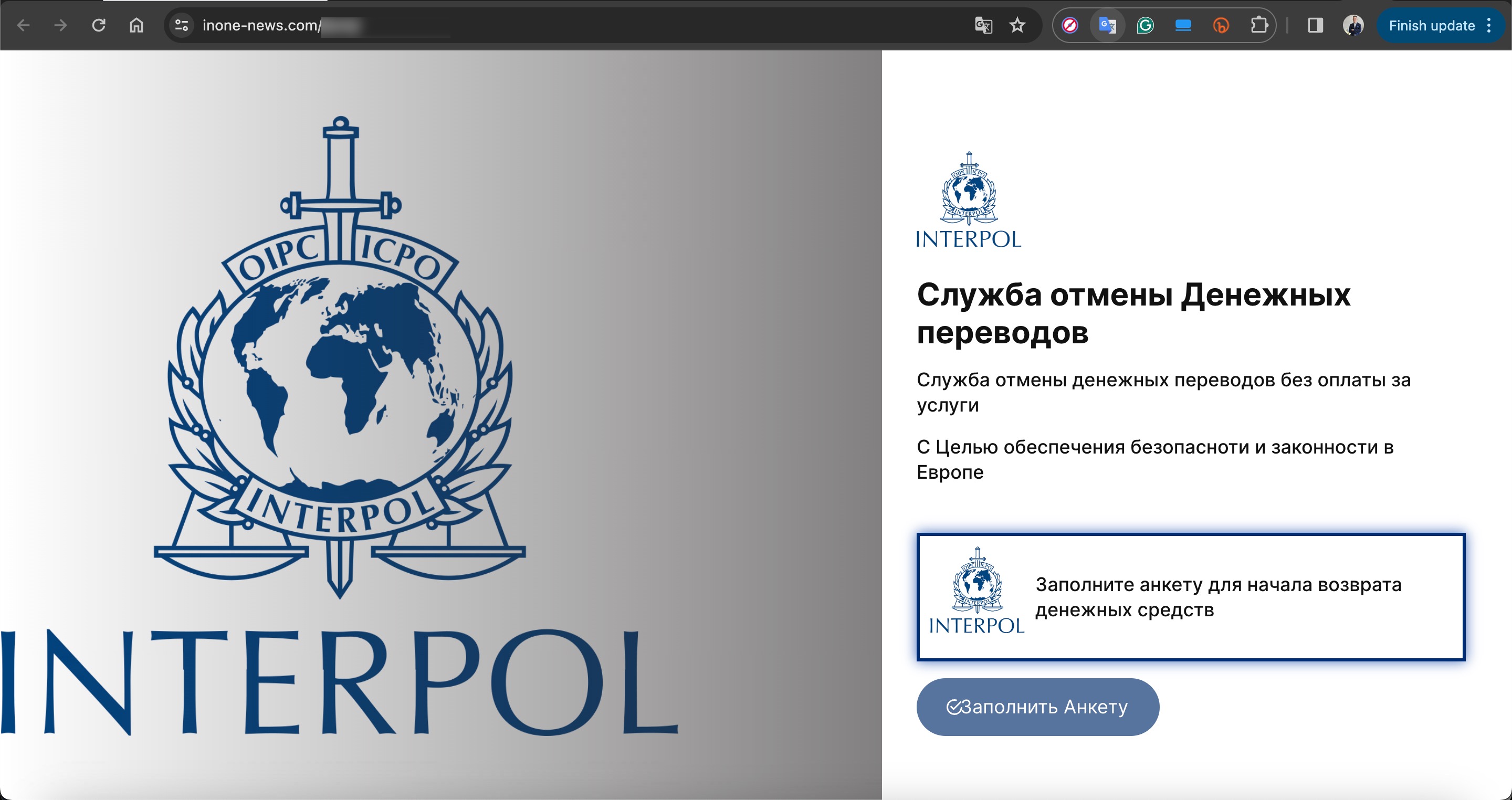

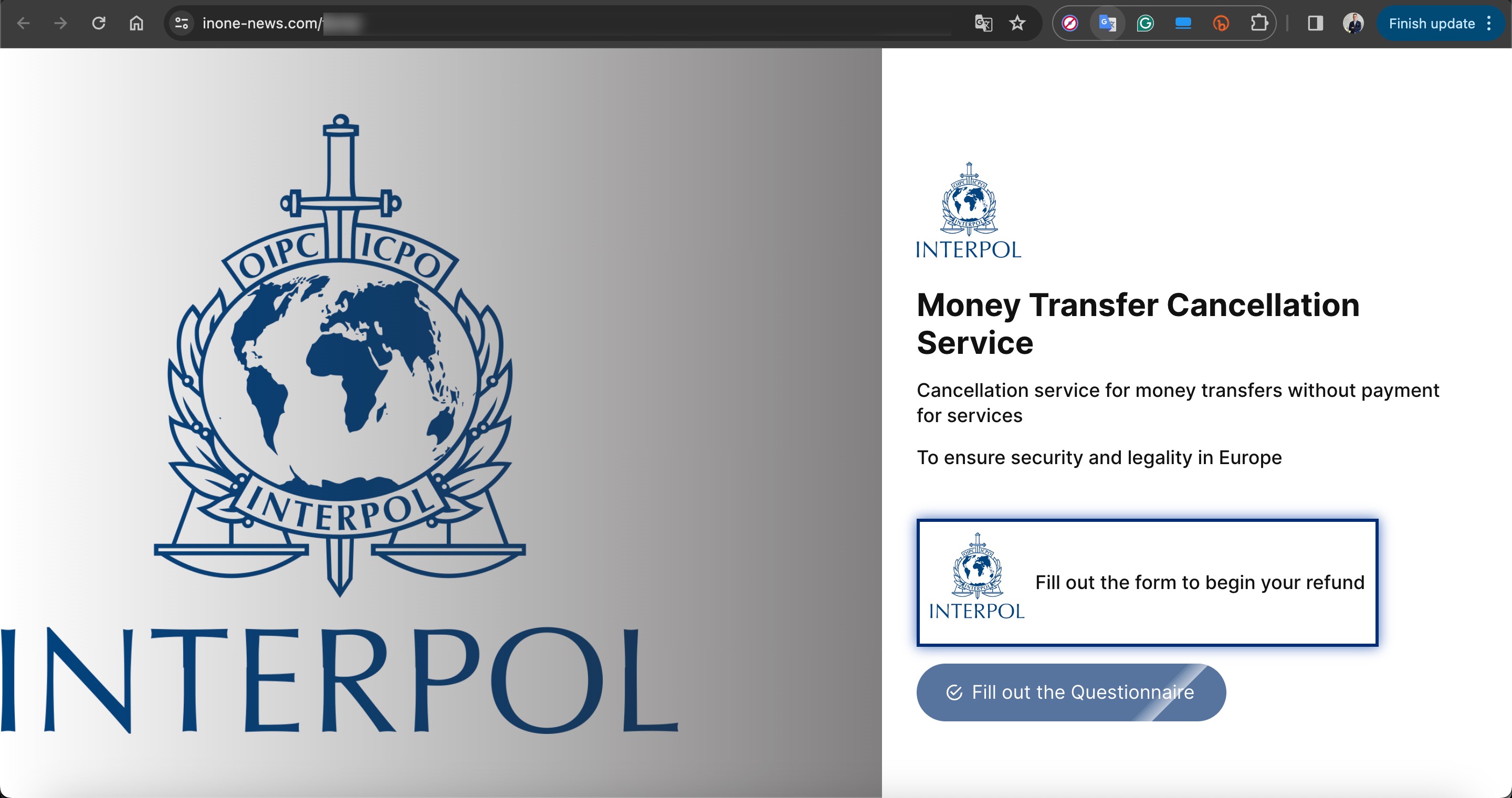

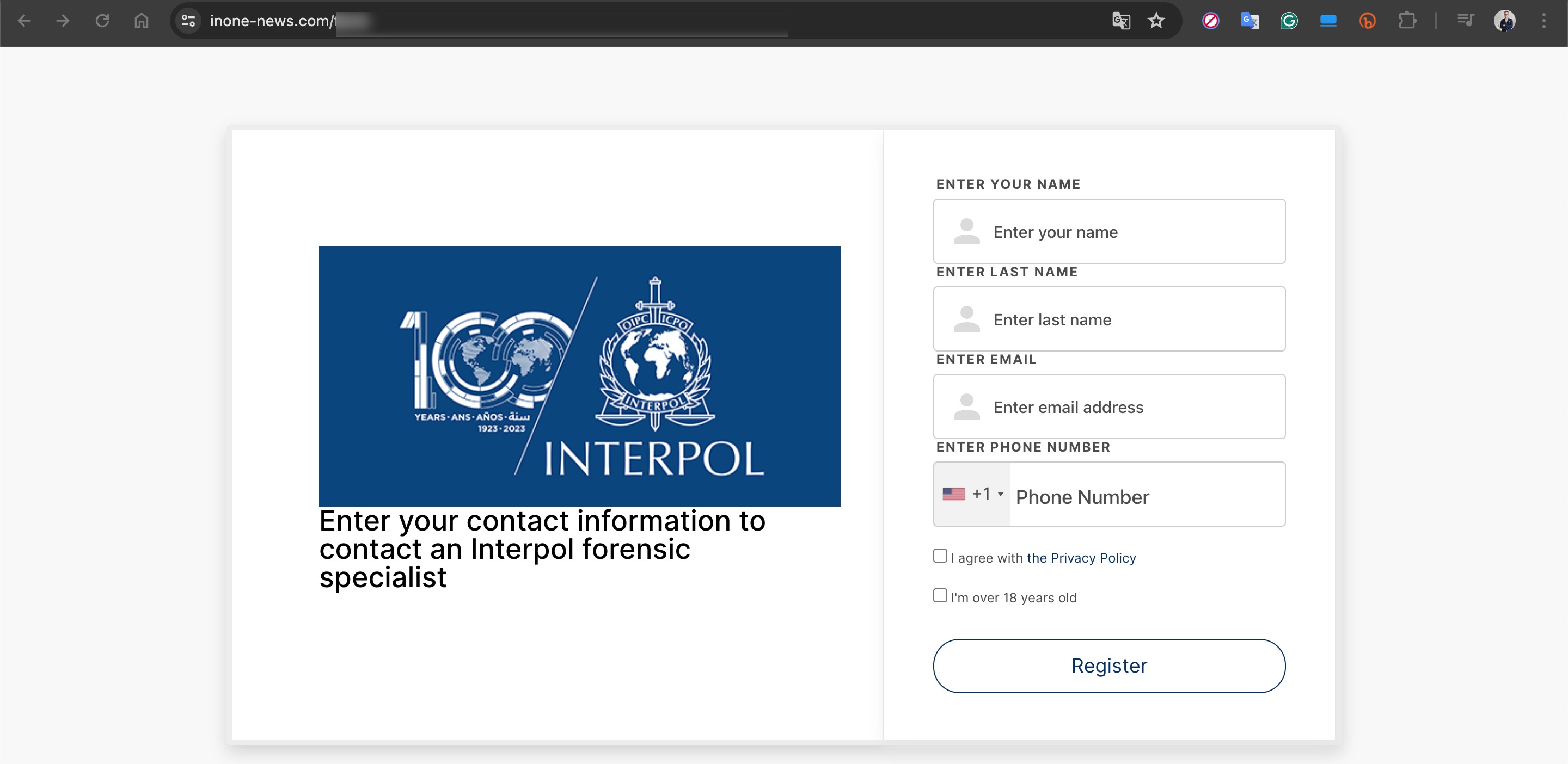

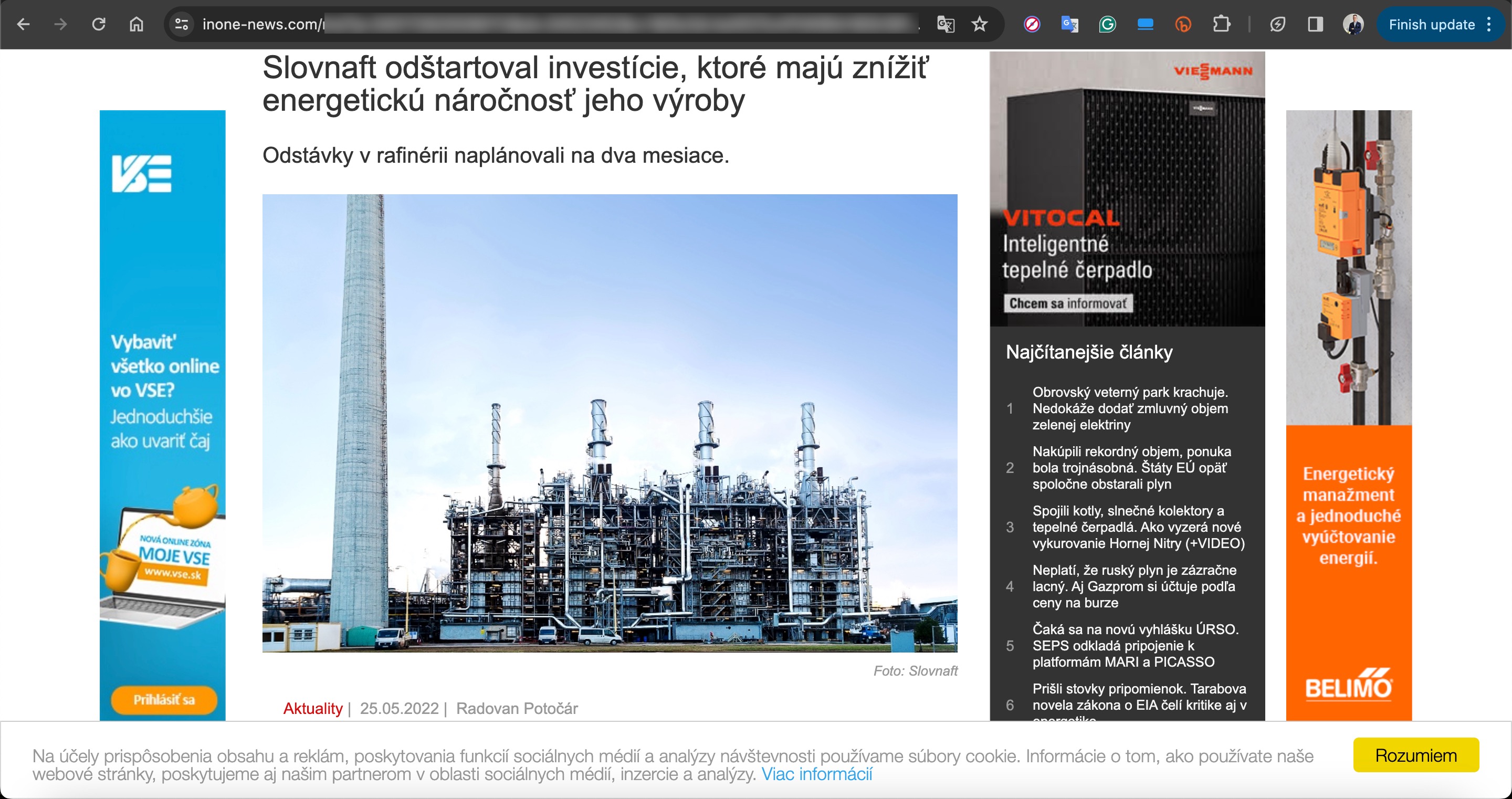

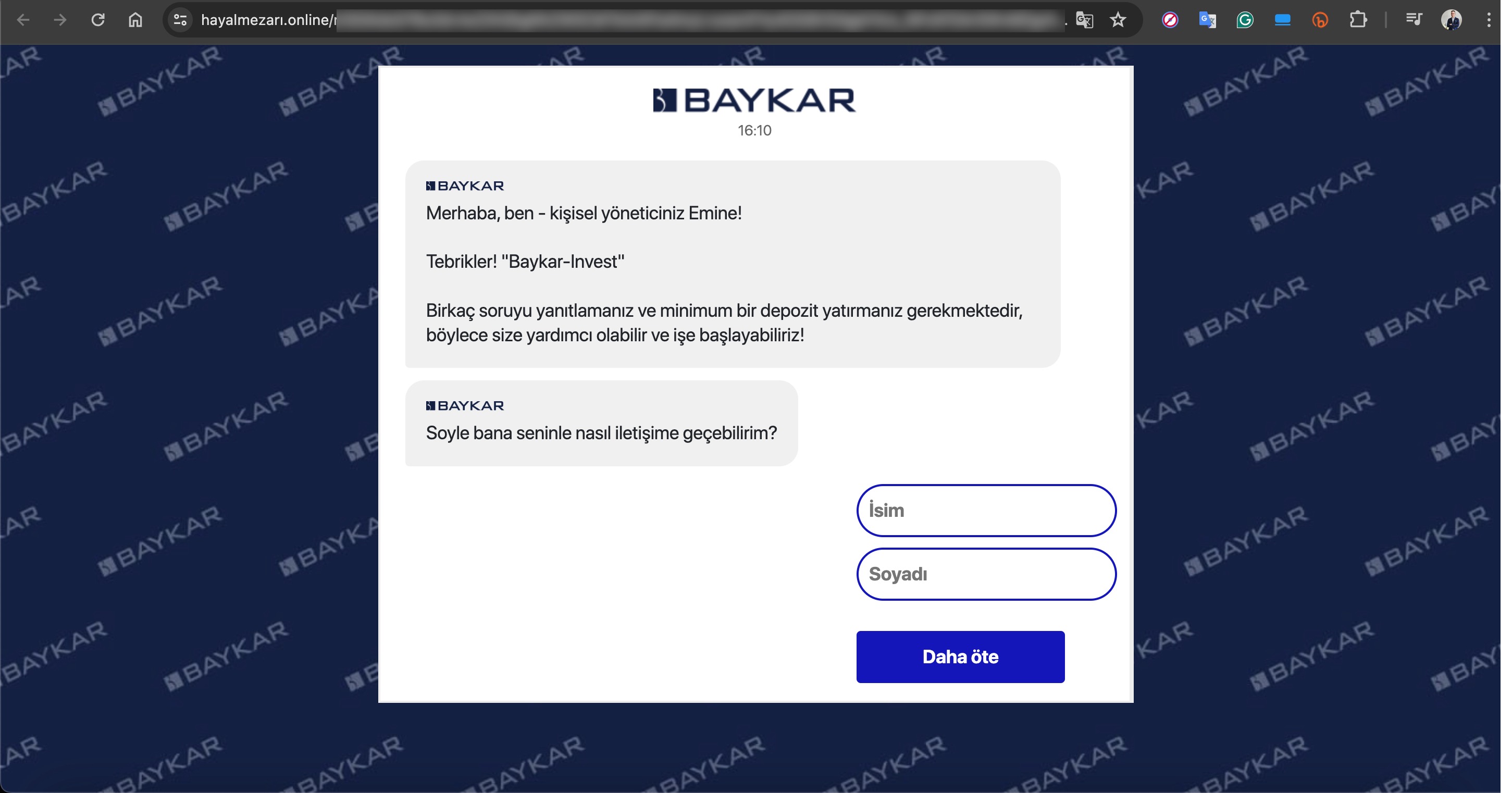

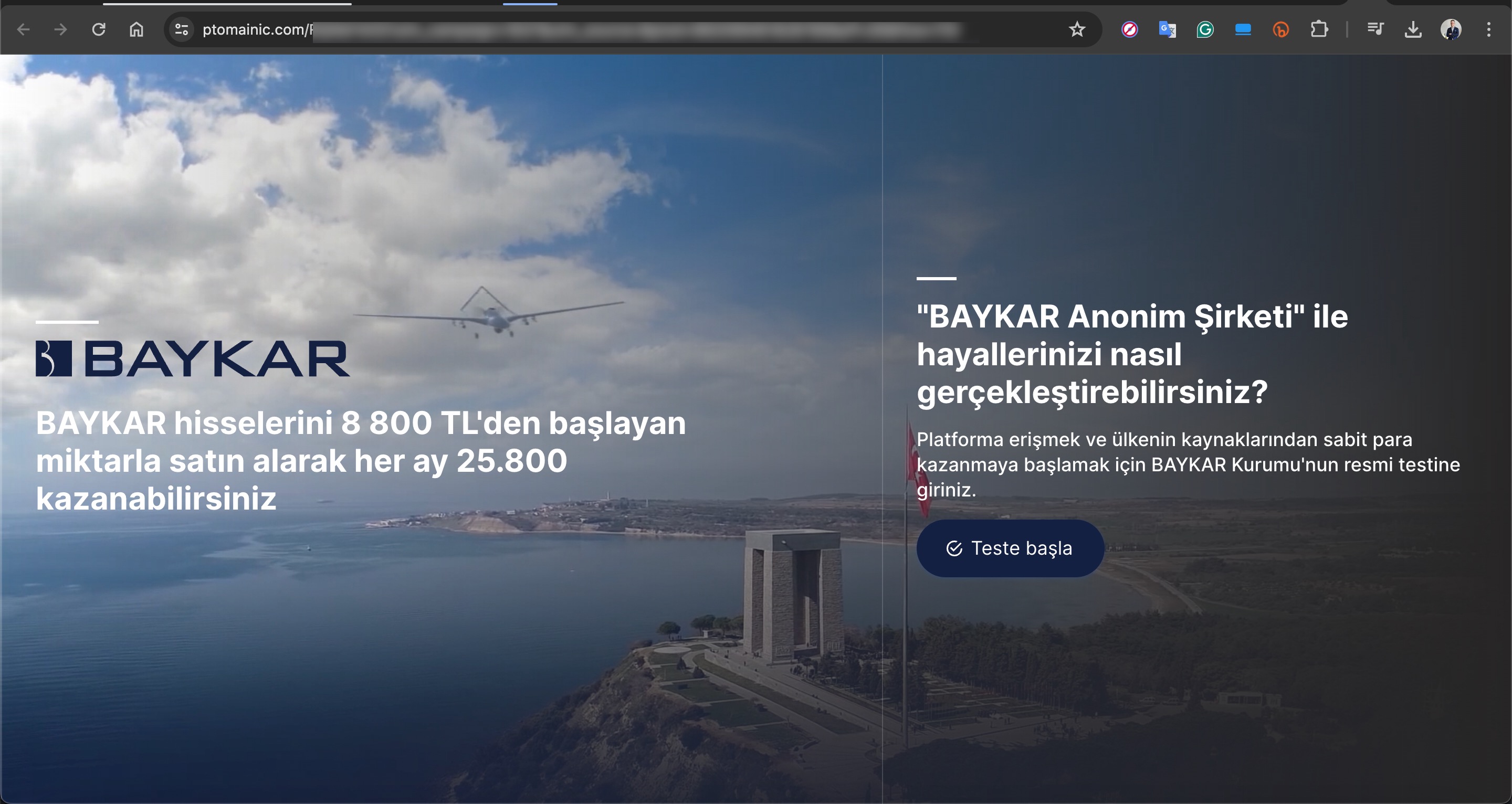

Based on information I recently obtained, I began closely monitoring a fraud gang that I believe to be based in Russia; the technical details of their operations will be covered in another article. Since October 2023, this gang has been attempting fraud by using the names of international companies such as Slovnaft, INA d.d., and Bosphorus Gaz, defense companies like Baykar, and organizations such as Interpol. They targeted their victims through fake ads on Facebook, Instagram, and Messenger, all of which are Meta platforms. Occasionally, they also placed fake ads on Google’s search page. To ensnare the citizens of their targeted countries, this gang did not hesitate to use deepfake images and videos of politicians, business people, and news anchors.

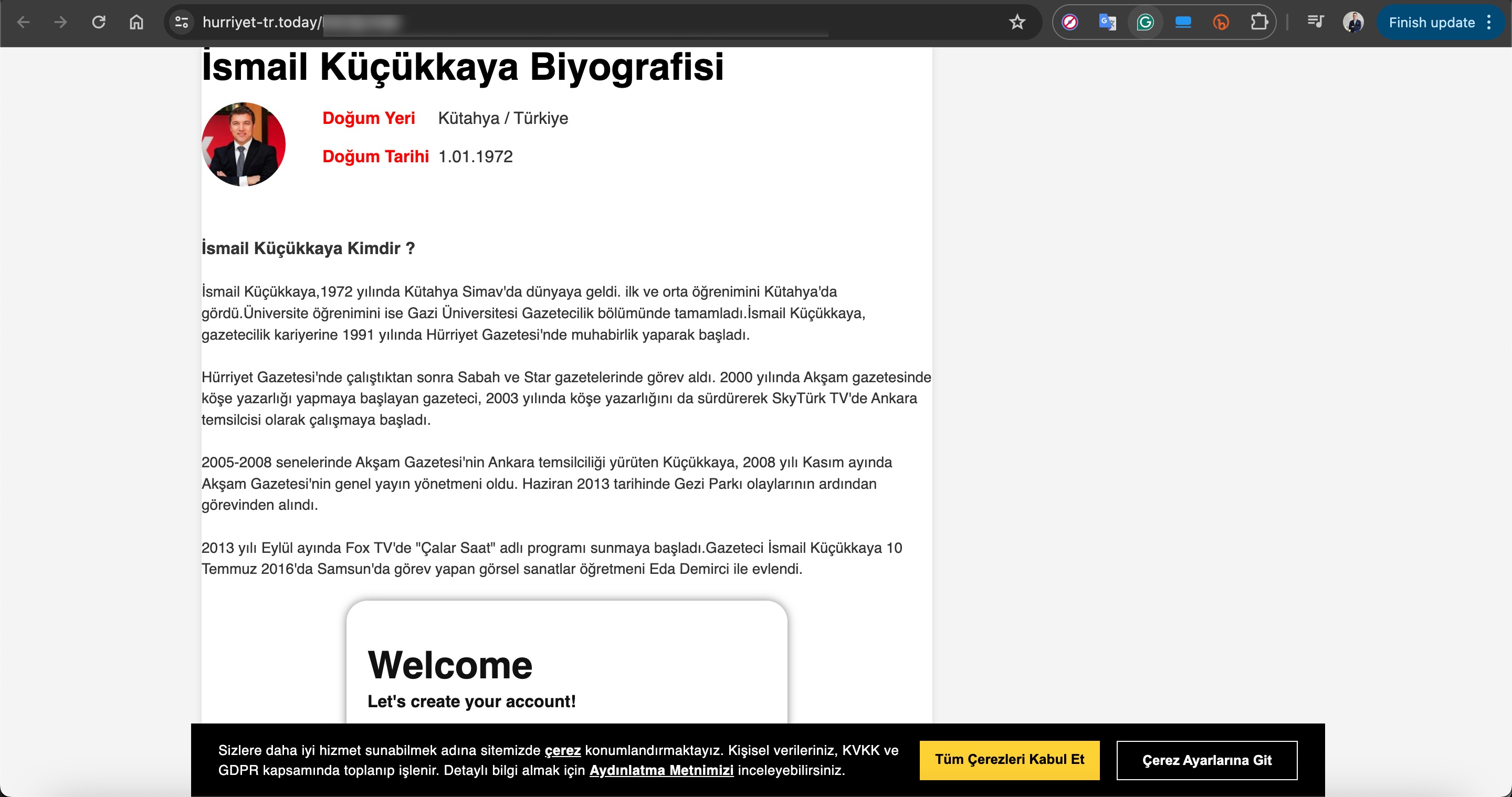

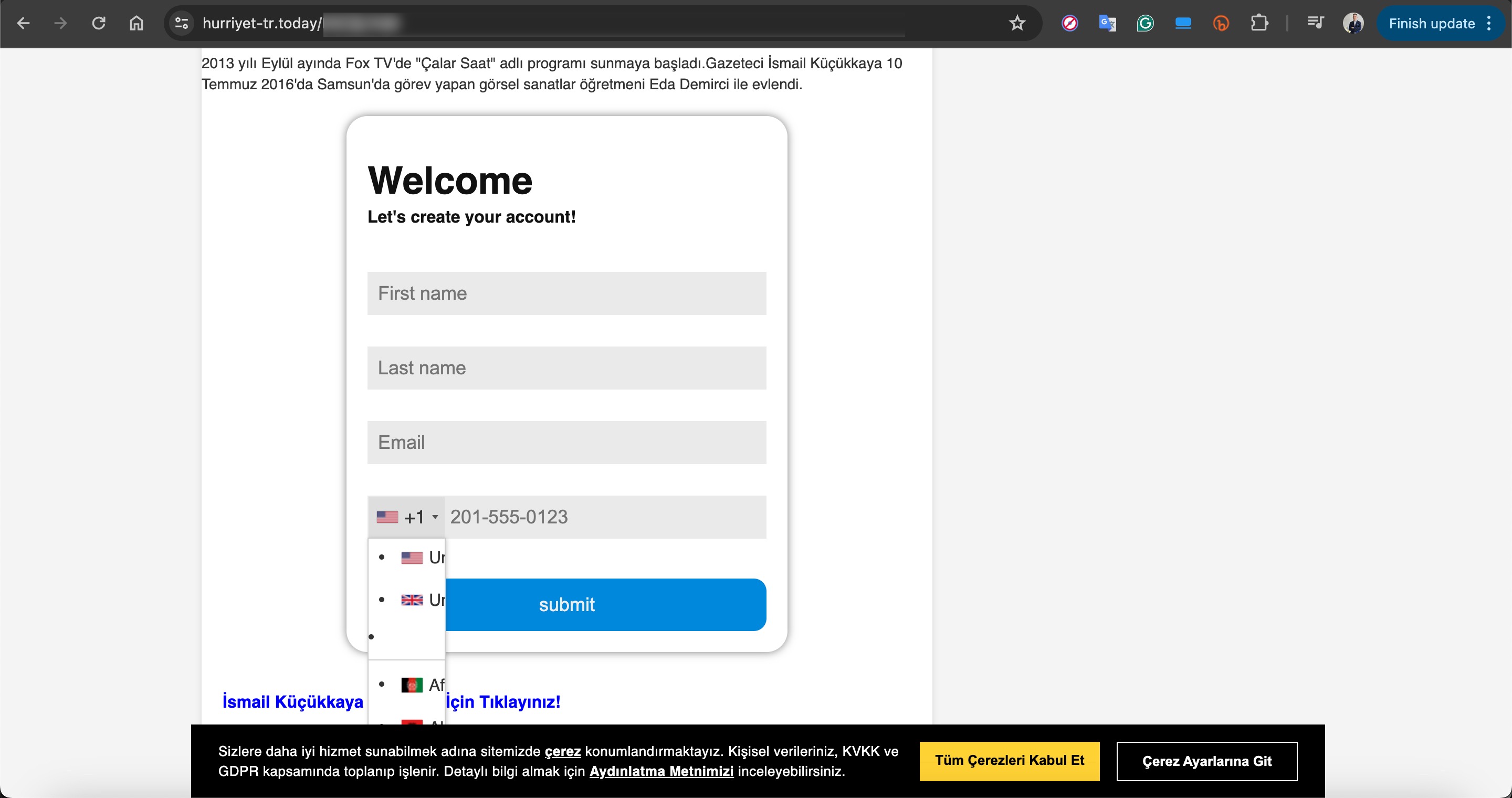

For example, in their ads targeting Turkish citizens, they used deepfake images and videos of well-known figures such as Selçuk BAYRAKTAR, Acun ILICALI, and İsmail KÜÇÜKKAYA.

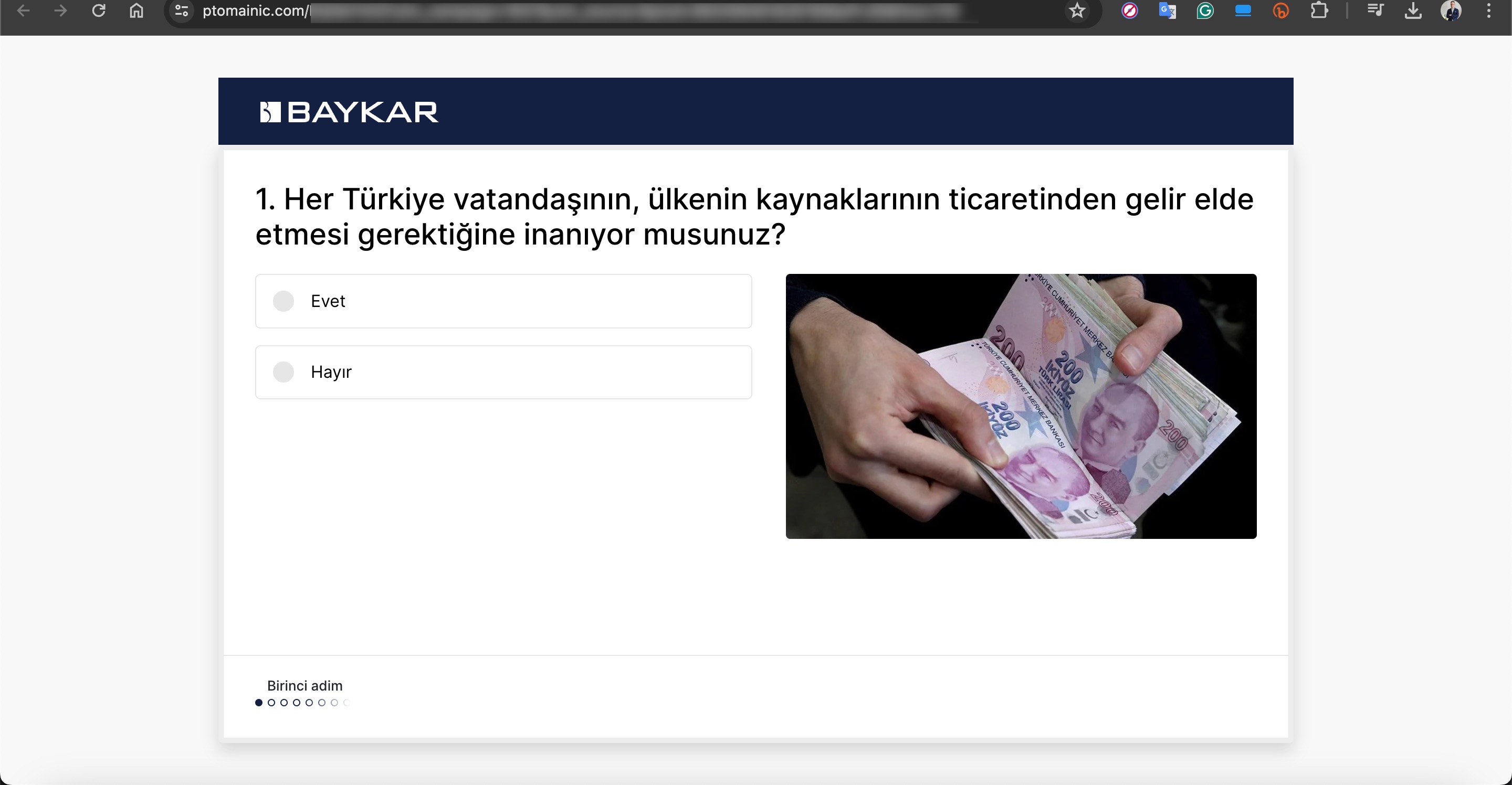

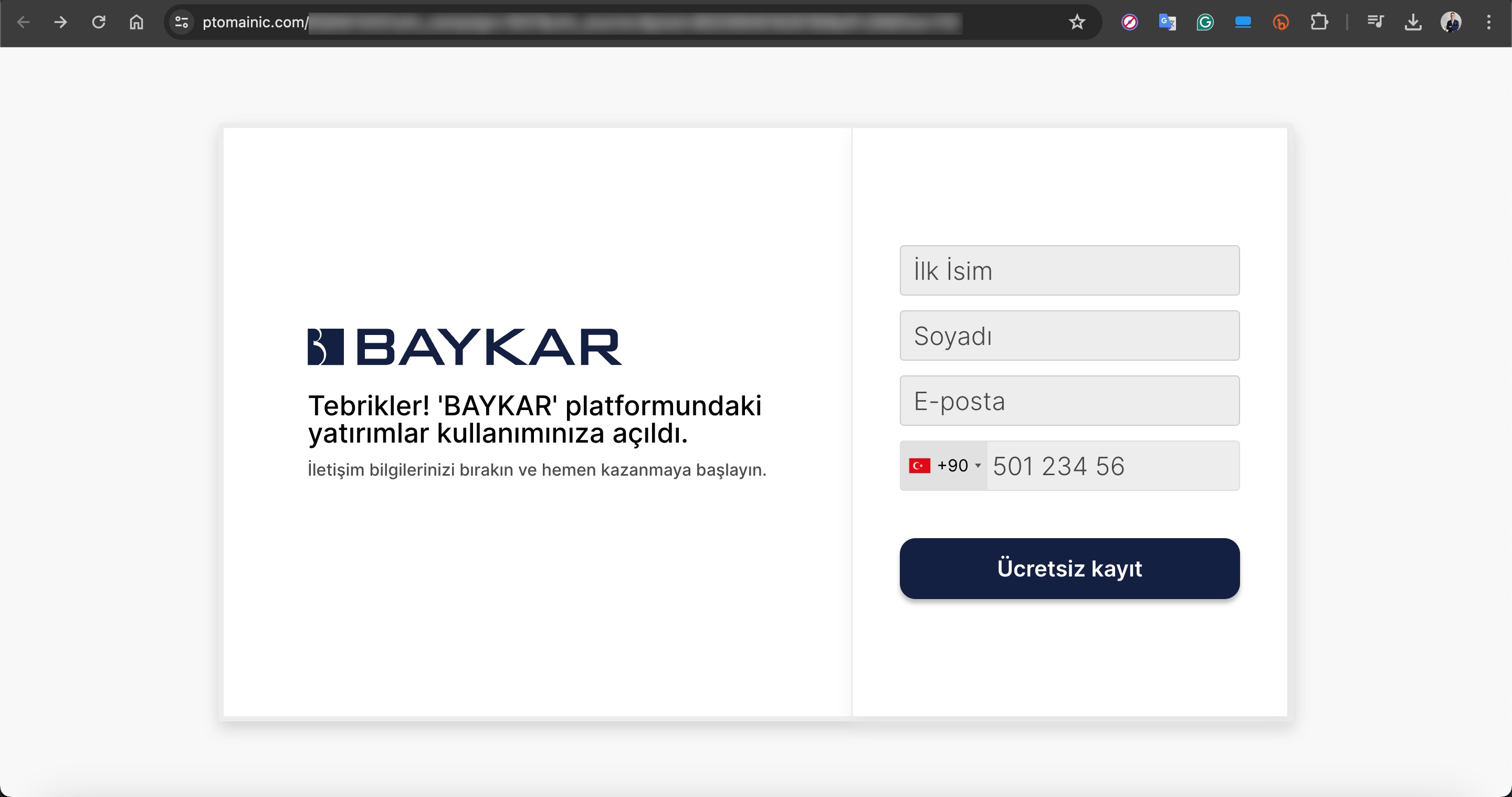

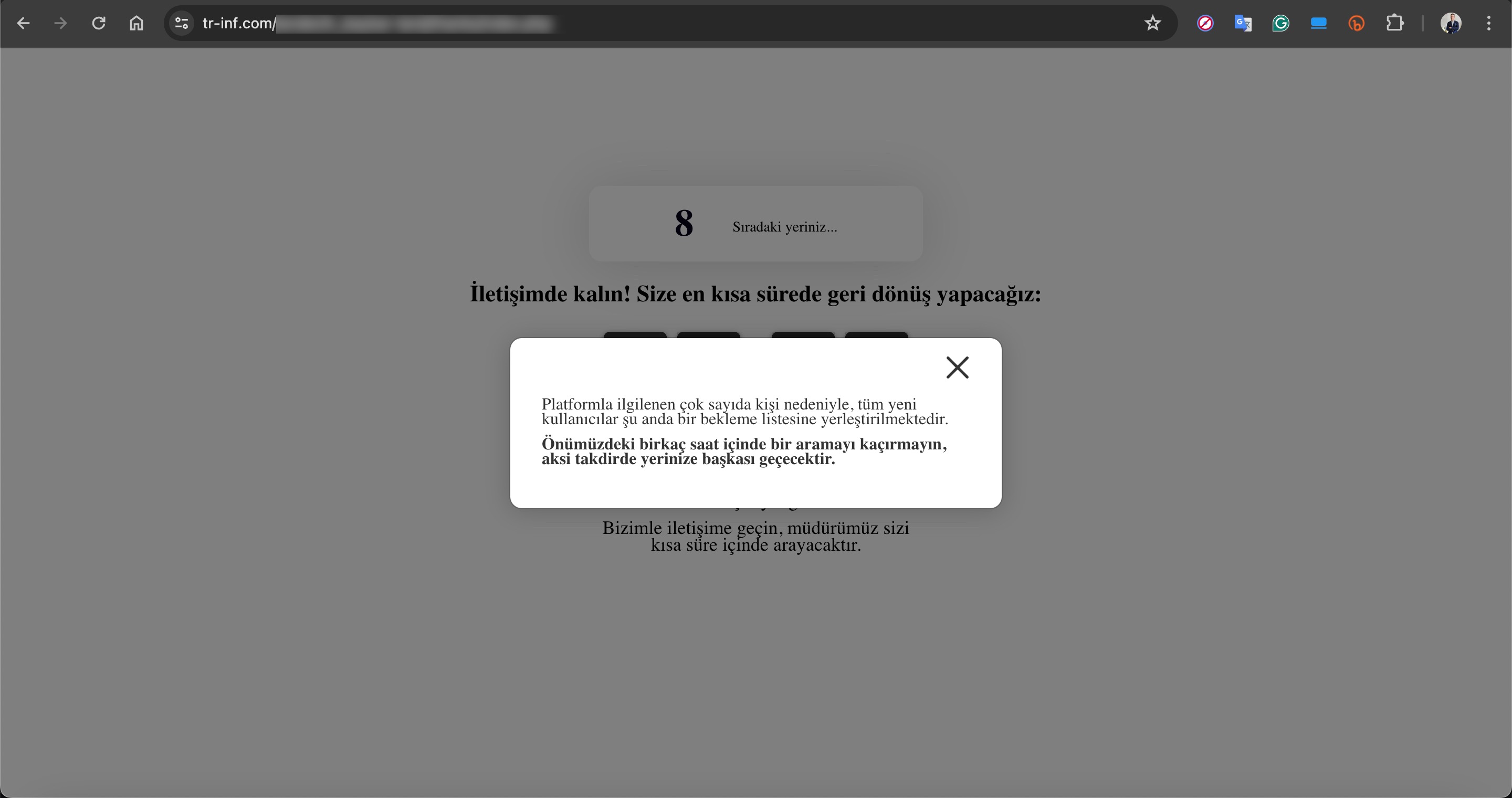

In one of the deepfake videos, viewers were promised that with a minimum investment of 8,000 TRY, they could earn up to 100,000 TRY per month. The associated links sometimes led to a very simple form asking only for a first name, last name, email address, and mobile phone number. Other times, they led to a more visually rich form with multiple-choice options designed to gather more information, followed by a message stating that an operator would be in contact shortly.

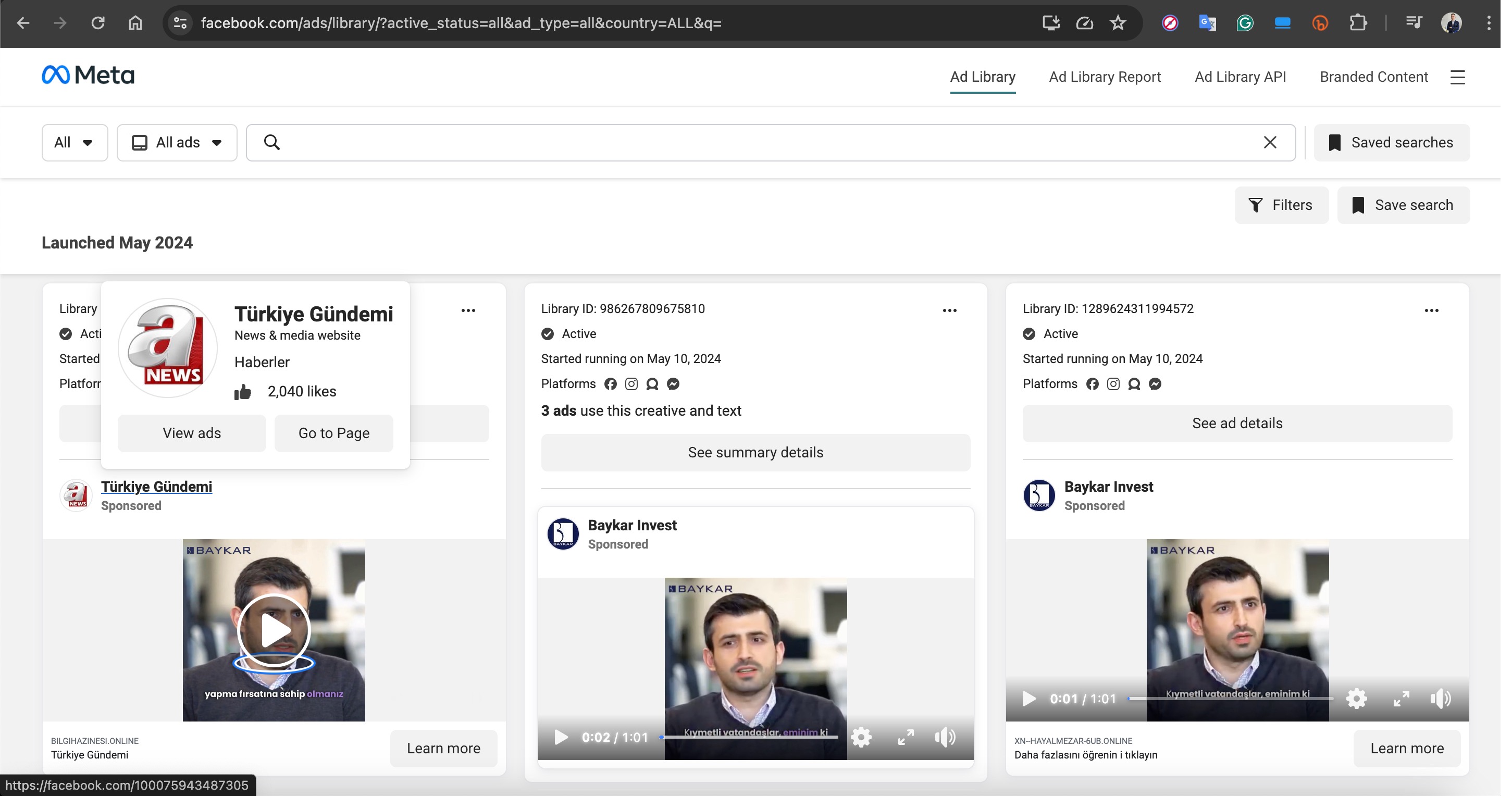

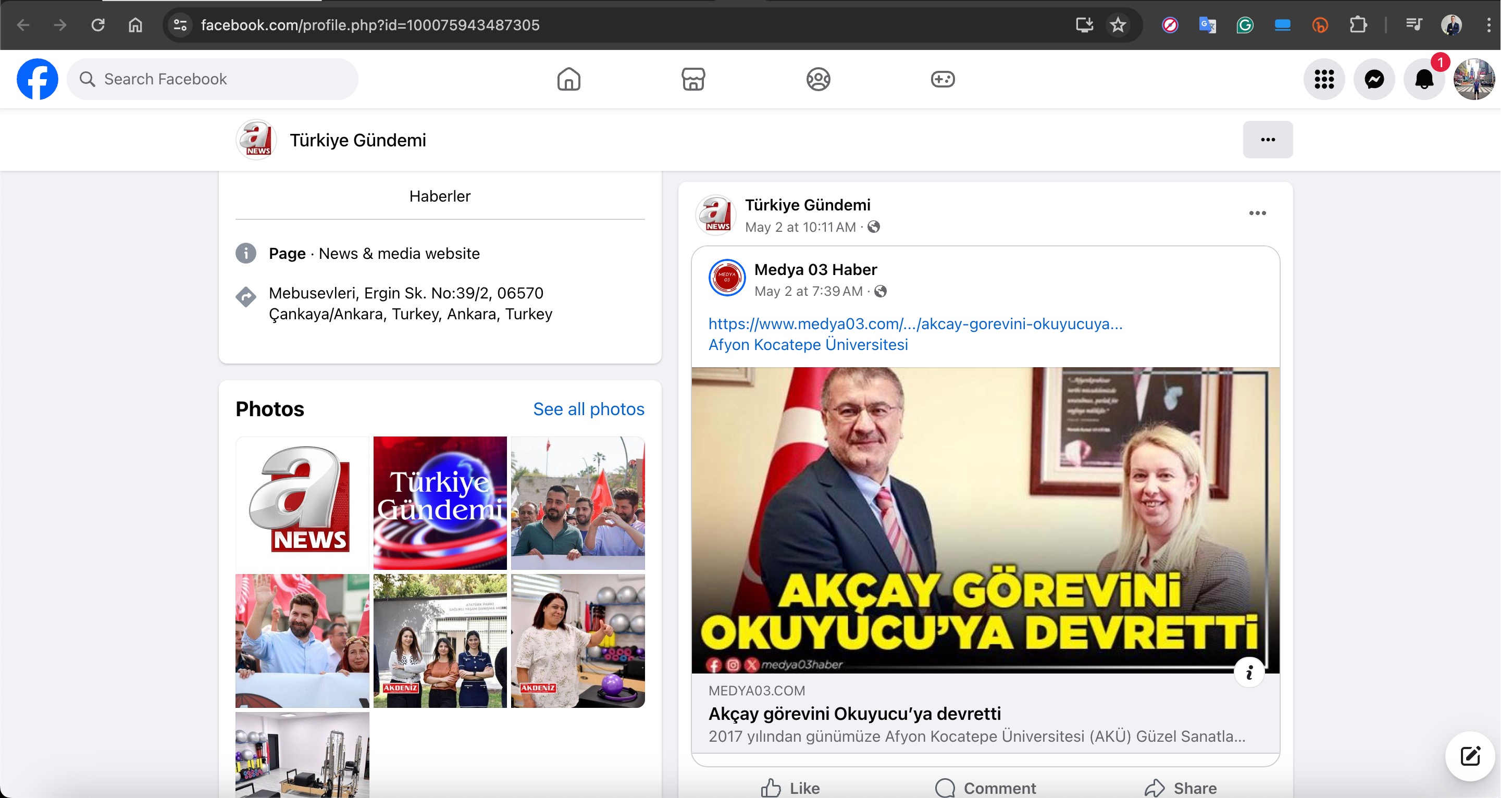

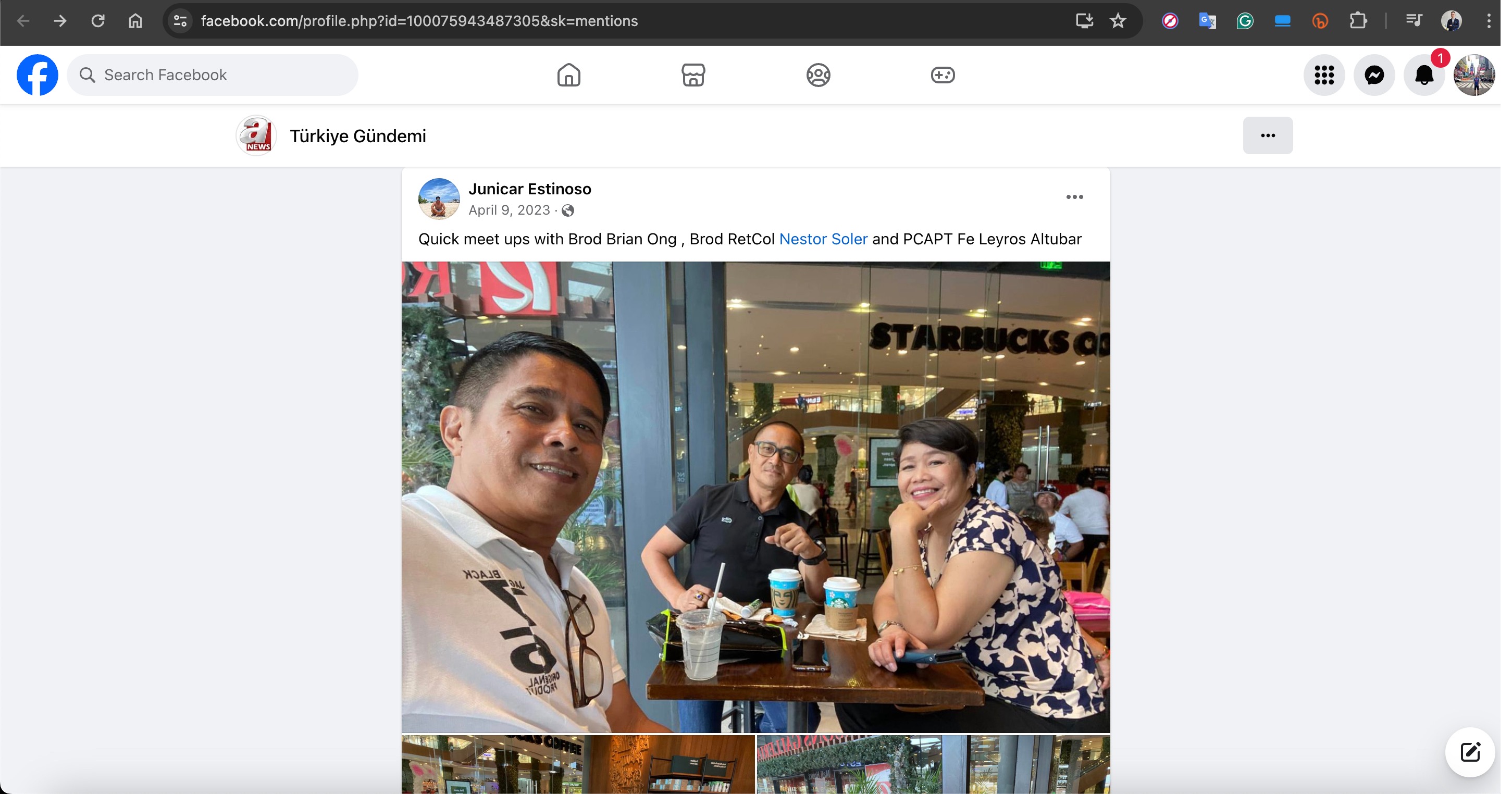

An examination of the Facebook accounts used for these fake ads suggested that they very likely belonged to innocent individuals whose accounts had been hacked and repurposed for this scam. This was easy to see from the older photos and videos shared on the accounts.

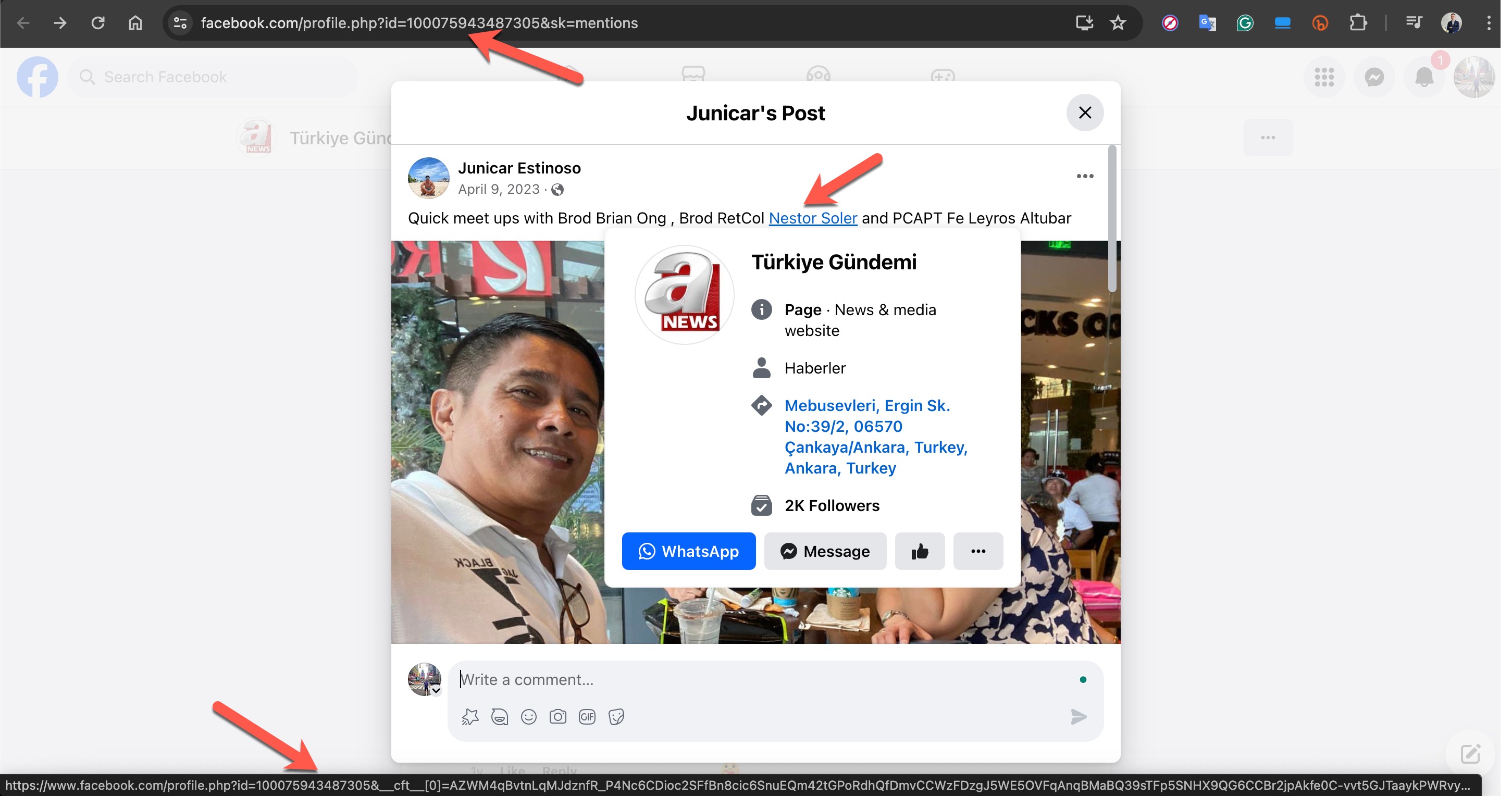

For example, the Facebook profile named “Türkiye Gündemi” in the screenshot below impersonated the A News channel. Inspecting its “Mentions” page revealed that the account originally belonged to a person named Nestor Soler and had been created in 2017.

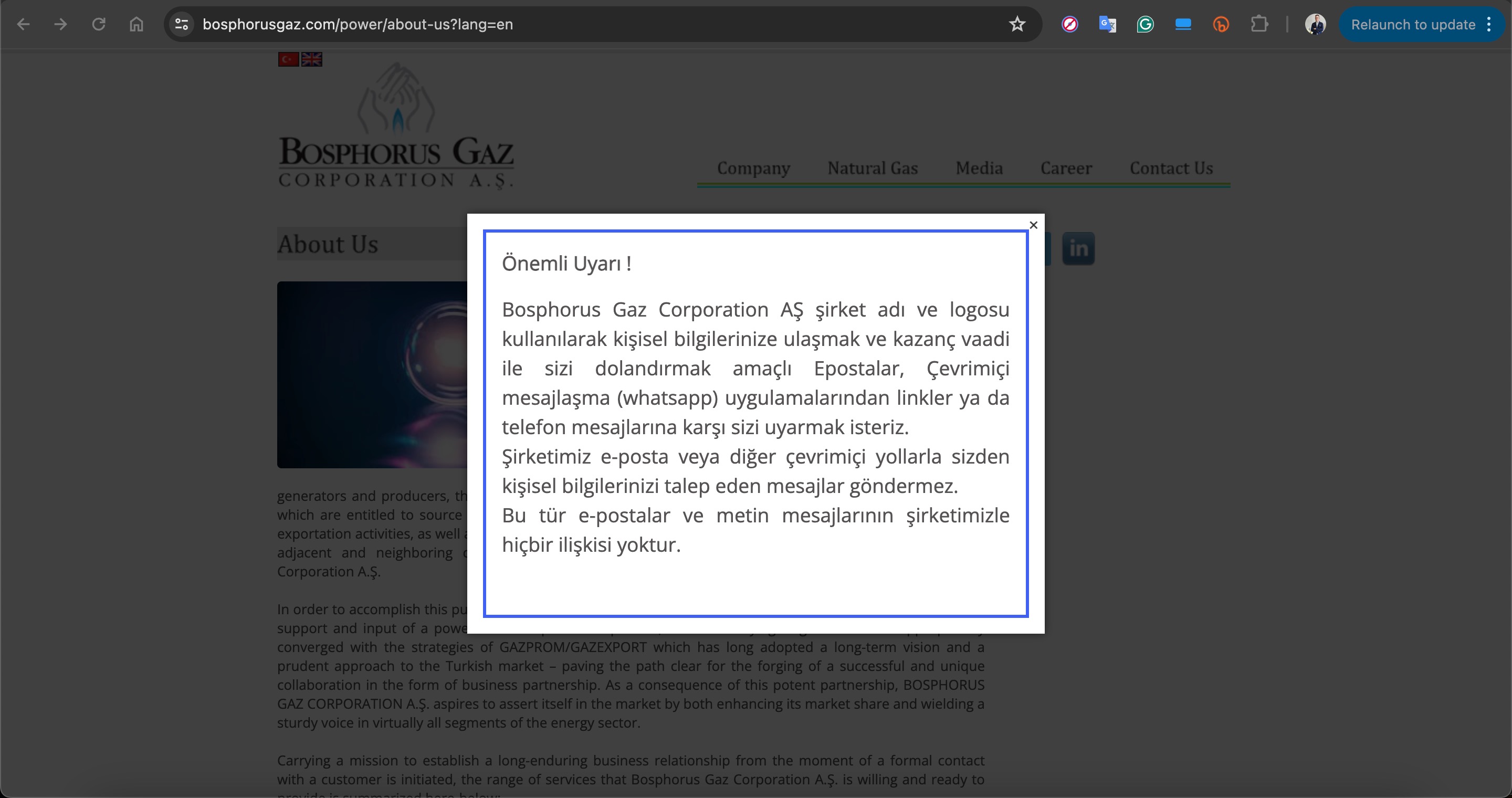

Within six months, a significant number of people in Turkey were likely targeted by this fraud method, prompting both Baykar and Bosphorus Gaz to issue public warnings.

Combatting Deepfake

In a cyber world where it becomes harder each day to avoid scams built on the latest technological advances, how can end users, experts, and journalists detect videos suspected of being deepfakes?

The deepfake videos described above are relatively easy to detect compared to other types of deepfakes. Academic research in this area is advancing rapidly, and technology giants like Intel are deploying technologies such as FakeCatcher to address the issue. However, because these enterprise-oriented technologies are often out of reach for end users, it becomes more important every day for people who follow the news on social media or shop online to verify suspicious images and videos, much as they need to verify information today.

According to experts and authorities, the following points generally stand out when determining whether an image or video is a deepfake:

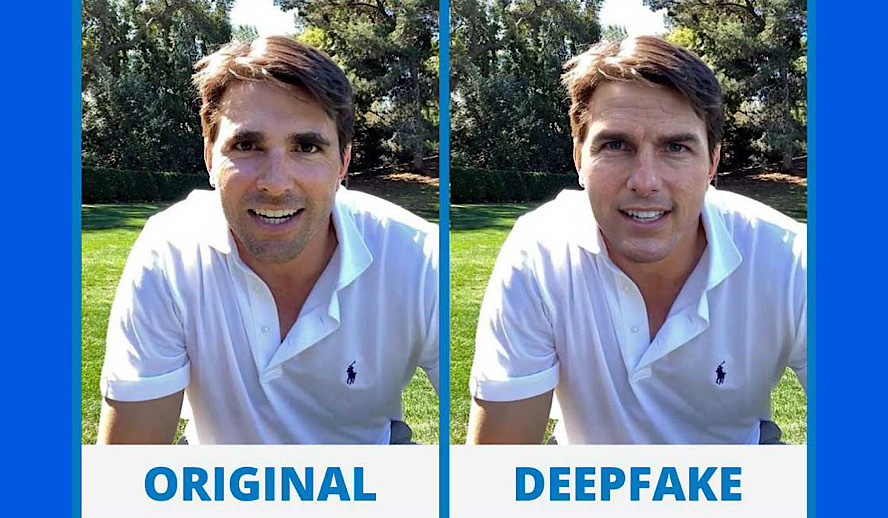

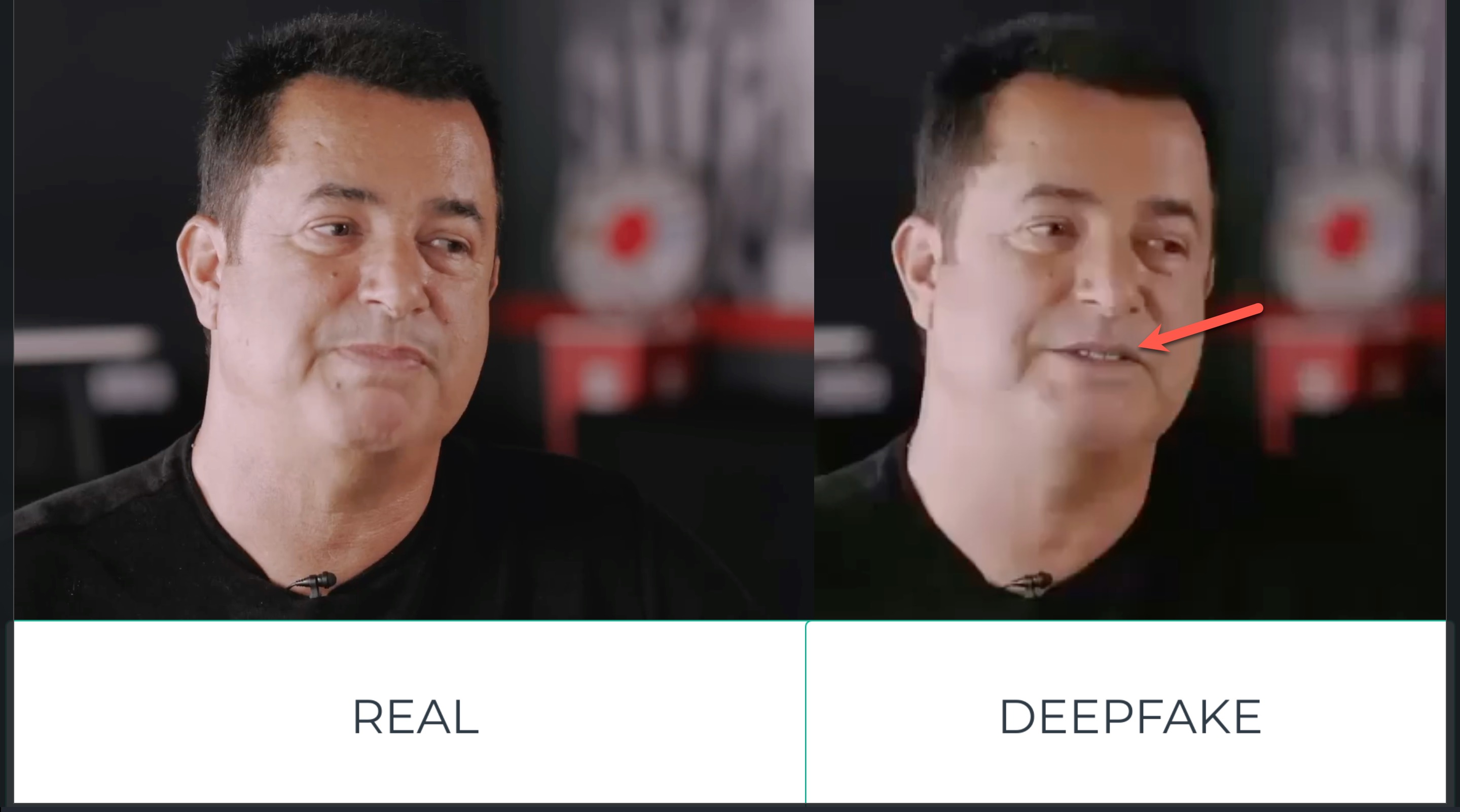

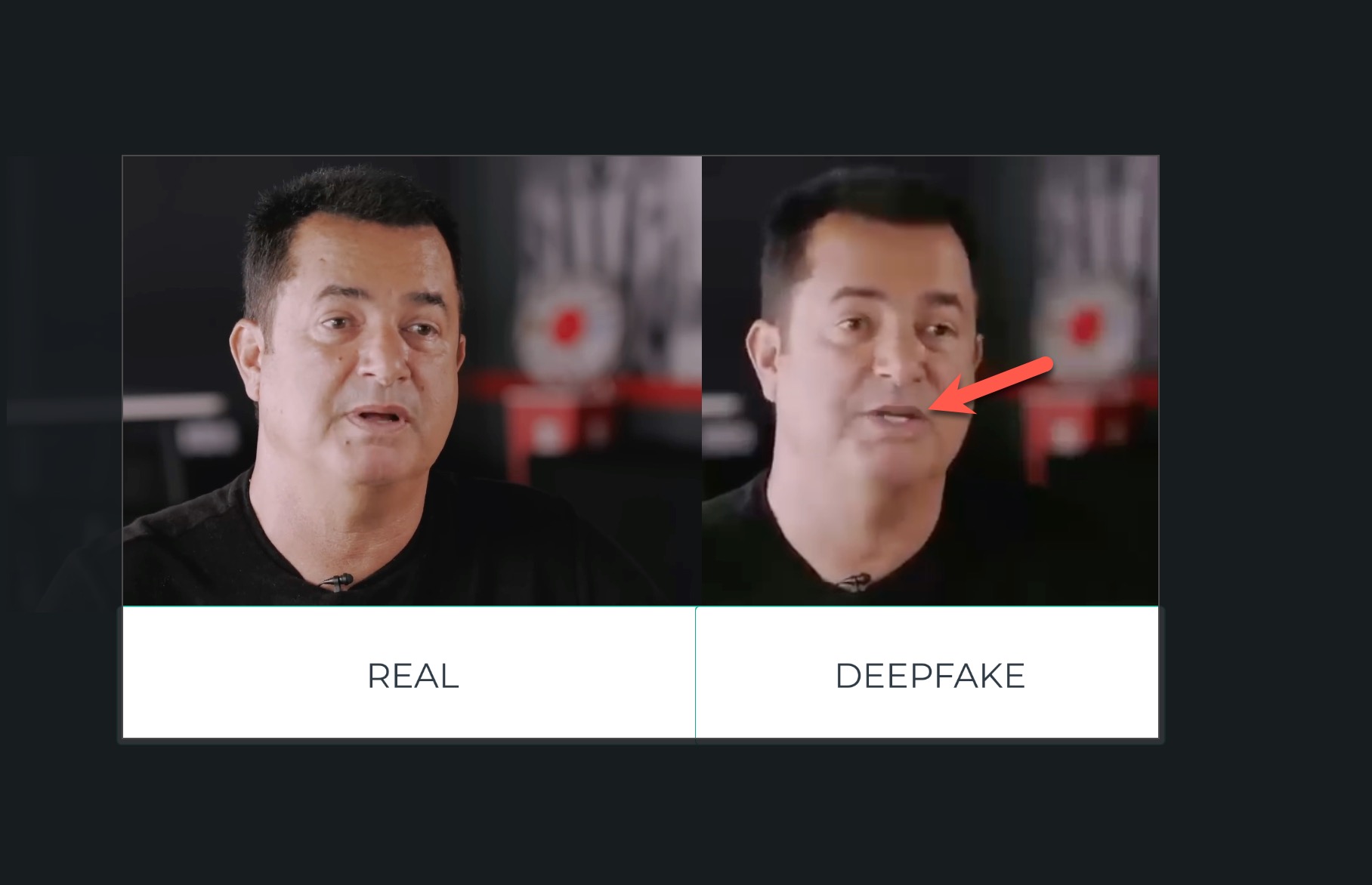

To find out whether these recommendations really work, the real video of Acun ILICALI, who is targeted by fraudsters, can be compared side by side with the deepfake video. When this is done, the first item in the list, “Blurring evident,” indeed stands out immediately.
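
One rough way to put a number on the “Blurring evident” sign is the variance of the Laplacian, a common sharpness heuristic: blurred regions have weaker edges and therefore a lower variance. The sketch below is only an illustration, not a deepfake detector and not the method used in this article. It assumes the frames have already been loaded as 2-D grayscale NumPy arrays; the function names and the synthetic test frame are hypothetical.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Return the variance of a 4-neighbour Laplacian over a 2-D grayscale image.

    Higher values mean more fine detail (sharper); lower values mean blurrier.
    """
    img = gray.astype(np.float64)
    # Discrete Laplacian on interior pixels: up + down + left + right - 4 * centre.
    lap = (
        img[:-2, 1:-1] + img[2:, 1:-1]
        + img[1:-1, :-2] + img[1:-1, 2:]
        - 4.0 * img[1:-1, 1:-1]
    )
    return float(lap.var())


def box_blur(gray: np.ndarray) -> np.ndarray:
    """Apply a 3x3 mean filter; used here only to simulate a blurred frame."""
    img = gray.astype(np.float64)
    padded = np.pad(img, 1, mode="edge")
    height, width = img.shape
    out = np.zeros_like(img)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + height, dx:dx + width]
    return out / 9.0


# Synthetic stand-ins for a "real" frame and a blurred "suspect" frame (assumption).
rng = np.random.default_rng(0)
real_frame = rng.integers(0, 256, size=(64, 64)).astype(np.float64)
suspect_frame = box_blur(real_frame)

real_score = laplacian_variance(real_frame)
suspect_score = laplacian_variance(suspect_frame)
print(f"real: {real_score:.1f}, suspect: {suspect_score:.1f}")
```

In practice you would crop the same face or mouth region from the real and the suspect video and compare the two scores. A clearly lower score on the suspect frame is only a hint, since compression and resizing also reduce sharpness.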

Similarly, when close-up scenes are placed side by side and played in sync, inconsistencies also raise suspicion, especially in mouth movements, facial expressions, and the visibility of teeth.

If you ask me whether there are any tools or applications that can detect these deepfake videos on your own system, I would recommend taking a look at the “Deepfake detection using Deep Learning” project.

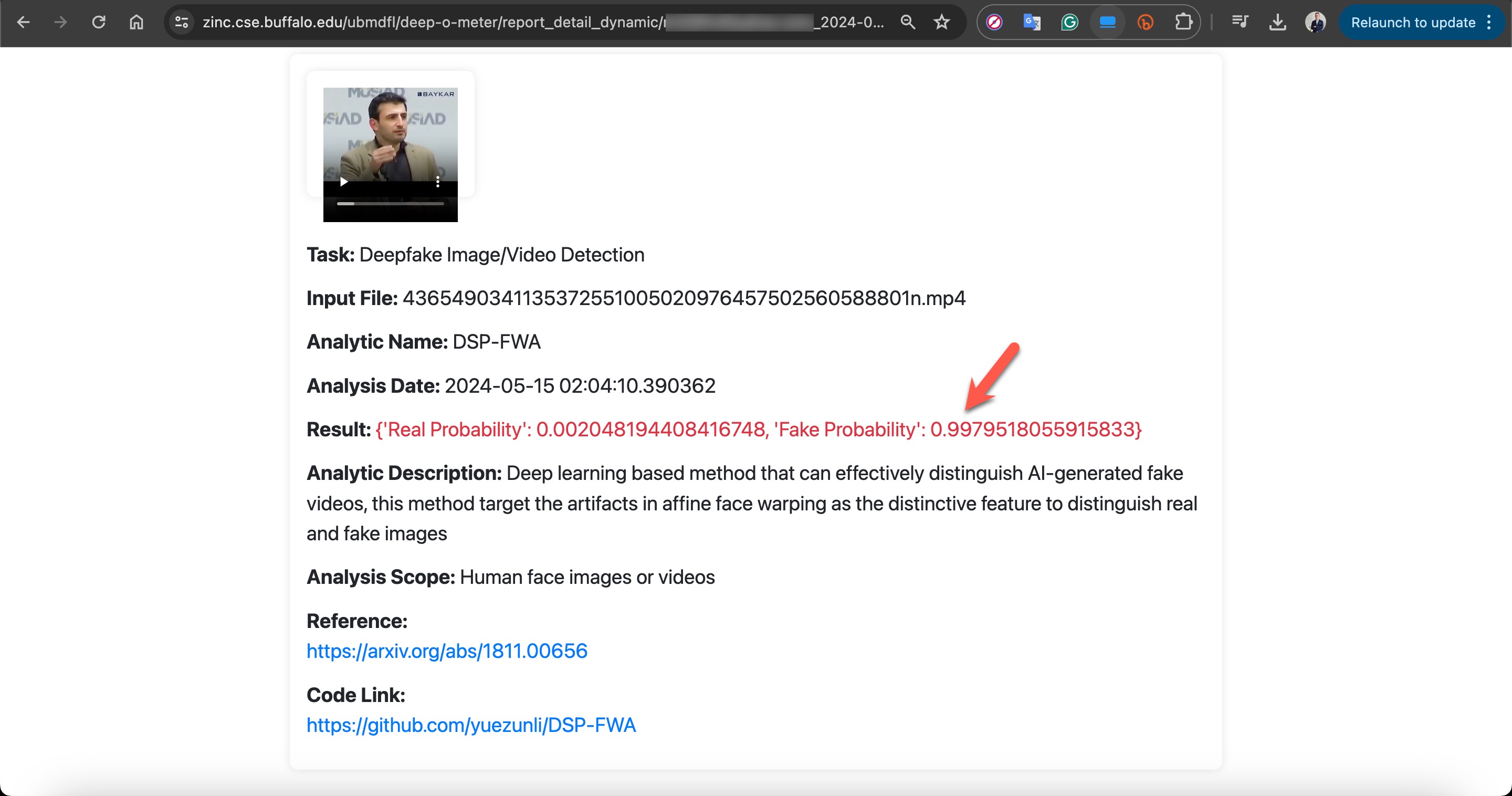

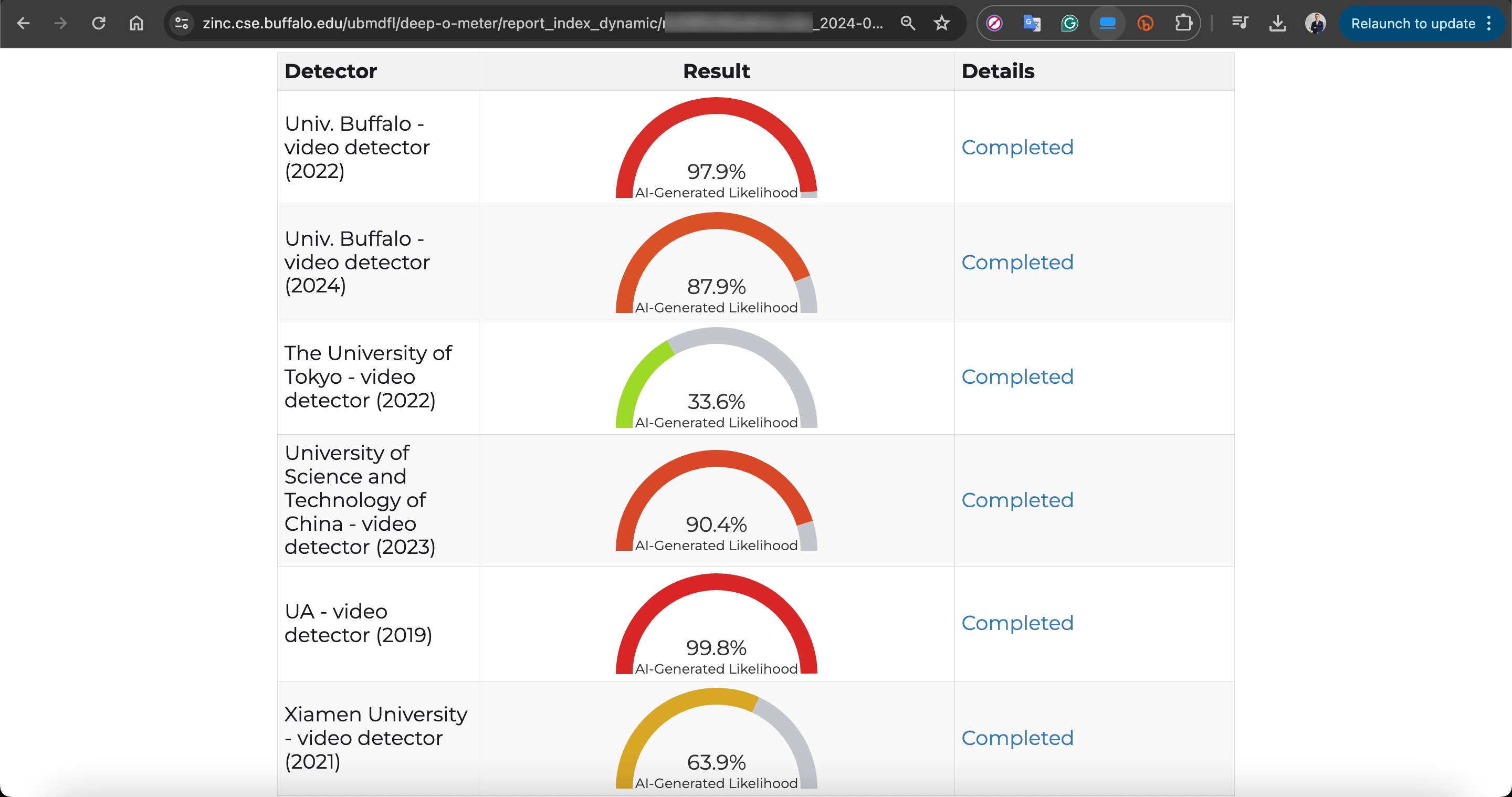

For those who want to detect deepfake videos online, effortlessly and for free, using multiple detection algorithms drawn from academic studies, I highly recommend the DeepFake-O-Meter application.

For instance, when I used DeepFake-O-Meter to analyze a deepfake video that scammers had created from excerpts of Selçuk Bayraktar’s video, most of the algorithms clearly indicated inconsistencies in the video, suggesting that I should be suspicious.
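
When several detectors each return their own score, it can help to summarize them before deciding how suspicious to be. The sketch below shows one simple way to do that with a majority vote and a median. It is not DeepFake-O-Meter's own logic or output format: the detector names, the scores, the 0-to-1 "probability of fake" scale, and the 0.5 threshold are all assumptions made for illustration.

```python
from statistics import median


def summarize_detectors(scores: dict[str, float], threshold: float = 0.5) -> dict:
    """Summarize per-detector fake probabilities (0.0 = likely real, 1.0 = likely fake)."""
    if not scores:
        raise ValueError("at least one detector score is required")
    # Detectors whose score reaches the threshold count as votes for "fake".
    flagged = sorted(name for name, p in scores.items() if p >= threshold)
    return {
        "flagged": flagged,
        "flagged_ratio": len(flagged) / len(scores),
        "median_score": median(scores.values()),
        # Suspicious when a strict majority of detectors flag the video.
        "suspicious": len(flagged) * 2 > len(scores),
    }


# Hypothetical detector names and scores, not real measurements.
example_scores = {
    "detector_a": 0.91,
    "detector_b": 0.78,
    "detector_c": 0.35,
    "detector_d": 0.66,
}
summary = summarize_detectors(example_scores)
print(summary)
```

A majority vote is deliberately conservative: one detector disagreeing, as in the hypothetical example, does not cancel out the others, but the result should still be treated as a reason to verify further rather than as proof.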

For those who want to detect such deepfake images and videos by just using a browser extension, the DeepfakeProof Chrome extension could be worth trying.

How Easy Is It to Create a Deepfake Video?

Given how widely fake advertisements using deepfake videos had spread over the past six months, it was evident that creating these videos had to be extremely easy and low-cost, or even free, for fraudsters. To test this assumption, I began researching applications that fraudsters could use to create deepfake videos, and my focus shifted immediately to the Wav2Lip model, mainly because of the blurriness of its output, its use of lip-sync techniques, and, of course, the fact that it is open-source and free.

Wav2Lip is a free, open-source deep learning model that can lip-sync videos to any audio track with high accuracy. It works for any language, voice, or identity, including synthetic voices and CGI faces.

When I tried the Wav2Lip_simplified_v5.ipynb Jupyter notebook on one of the videos targeted by fraudsters, the result resembled the deepfake video: the lip movements were not always synchronized with the audio generated from text (an audio deepfake). This similarity reinforced the possibility that fraudsters were using this model.

Conclusion

The groundbreaking developments in artificial intelligence continue to grease the wheels for scammers. For instance, by simply paying a monthly fee of $5 and uploading a video or audio file of the person you want to mimic to the ElevenLabs web application, you can have any text you write spoken in that person’s voice. Services like this will always have the potential to be abused by malicious individuals.

As deepfake videos multiply on social media, let’s be very cautious, and let’s not forget that fraudsters could copy our voices (audio deepfake) and use them against our loved ones. It might be wise to agree on a keyword with your loved ones now, to be used for verification in emergencies in case your voice is copied.

In today’s world, where we can’t believe what we hear (audio deepfake), what we see (deepfake video), or what we read (misinformation, disinformation, deepfake text), let’s not forget the age-old advice: “Don’t believe anything you haven’t seen with your own eyes.”

Hope to see you in the following articles.